Measurement of dynamic fish dimension based on stereoscopic vision

Li Yanjun1, Huang Kangwei1,2, Xiang Ji3※


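(1. Zhejiang University City College, Hangzhou 310015, China; 2. College of Control Science and Engineering, Zhejiang University, Hangzhou 310027, China; 3. College of Electrical Engineering, Zhejiang University, Hangzhou 310027, China)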

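Abstract: Manual measurement of fish growth in aquaculture is time-consuming and laborious, and it disturbs the normal growth of the fish. To achieve dynamic perception and rapid, non-destructive detection of underwater fish information, this study proposes a stereo-vision-based method for measuring the dimensions of moving fish. Three-dimensional information is obtained with binocular stereo vision, a Mask-RCNN (Mask Region Convolution Neural Network) detects and finely segments the fish, and a 3D point cloud of the fish surface is then generated to compute the body dimensions of multiple freely swimming fish. Experimental results show that the mean relative errors of length and width are about 4.7% and 9.2%, respectively. The method meets the need for visual management and contact-free measurement of fish size in aquaculture and can provide a reference for graded rearing and rational feeding.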

Keywords: fish; machine vision; three-dimensional reconstruction; image segmentation; deep learning; Mask-RCNN; 3D point cloud processing

0 Introduction

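Fish body dimensions, and body length in particular, reflect both the growth status of individual fish and the characteristics of the population, and are therefore important in aquaculture. The traditional way to obtain them is to anesthetize the fish, take them out of the water and measure them by hand, which is time-consuming and laborious and disturbs their normal growth[1]. Machine vision is already widely used in aquaculture for weight grading[2], species identification[3], counting[4-5], behavior recognition[6] and freshness detection[7]. Because fish keep swimming under water, however, obtaining their dimensions by contact-free visual methods remains challenging.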

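Several studies have addressed machine-vision-based fish size measurement[8-10]. Yu et al.[11] built a platform for measuring the morphological parameters of large yellow croaker: a camera captured images of fish placed on a glass plate, and feature points were selected manually to compute body length and width, with a measurement error of 0.28%. Monkman et al.[12] located fish with a deep learning model, derived the relationship between pixel length and real length from a marker placed on the fish surface, and computed fish length with an error of about 2.2%. These methods rely on monocular vision and recover metric information only at a fixed depth, so they are generally used for fish that have already been caught and are unsuitable for moving fish under water. Binocular (stereo) methods can reconstruct the 3D information of a scene and therefore allow freely swimming fish to be measured. Torisawa et al.[13] obtained 3D information from stereo images by direct linear transformation, with a coefficient of variation of the length measurement error below 5%. Muñoz-Benavent et al.[14] designed a geometric model of tuna, used image features of the head and tail to initialize the model position, and then located the fish and extracted its contour by model matching; 90% of the results had a relative error of about 3%. Pérez et al.[15] studied both a mechanical fish and freely swimming fish in the laboratory: after edge extraction and filtering, the fish were detected and their dimensions at different angles were computed by stereo vision, with a measurement error of about 4%. Because of the complexity of the underwater environment and the spatial distribution of the fish, stereo measurement of freely swimming fish requires 3D reconstruction, fish localization, contour extraction and pose estimation on the captured images. Handling these steps improves the generalization and applicability of the algorithms and provides an effective means of contact-free fish size measurement in aquaculture.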

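This study combines deep learning and stereo vision to achieve fast, non-destructive size measurement of different fish species in different scenes. On a self-built fish size measurement platform, an aquaculture monitoring system and a fish size calculation program were developed. The stereo images are reconstructed in 3D through camera calibration, stereo rectification and stereo matching. A dataset was built to train a Mask Region Convolution Neural Network (Mask-RCNN) model, which, combined with morphological operations and the GrabCut algorithm, detects and segments the fish. Fish surface points are extracted from the 3D information within each segmentation, coordinate transformations unify the orientation and position of each fish, and the body length and width are computed. The method offers an approach to fast, automatic acquisition of the dimensions of freely moving fish.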

1 Materials and Methods

1.1 Experimental materials and system setup

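The test samples were 15 spotted sea bass with body lengths of 112.3-141.8 mm, 5 pearl gentian groupers with body lengths of 233.0-247.0 mm, and 5 perch with body lengths of 245.0-290.0 mm. Two scenes were designed, "multiple fish per tank" and "one fish per tank", using a cylindrical tank 1 m in diameter and 1 m high and a rectangular rearing box of 880 mm×630 mm×650 mm as containers. The multi-fish scene, with several fish in the same tank, resembles a real aquaculture environment; it was used to collect images for the dataset and to validate the detection and segmentation performance of the deep learning model. In the single-fish scene, one fish whose dimensions had been measured by hand was placed alone in the rearing box to validate the accuracy of the size measurement algorithm.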

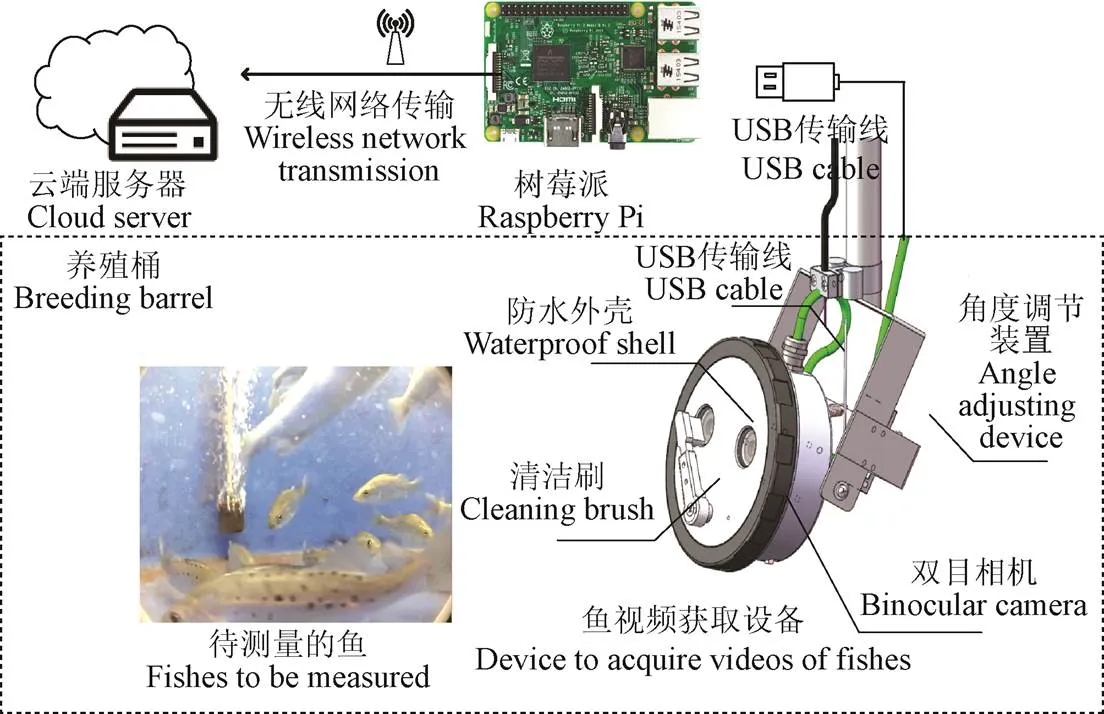

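The self-developed fish size measurement system is shown in Fig.1. A binocular camera in a waterproof housing transmits underwater video of the fish over a USB cable. The camera resolution is 2 560×720 pixels, the video frame rate is 30 Hz, and the baseline length is 6 cm. A Raspberry Pi pushes the video stream, and a cloud server with four Nvidia GTX 1080 Ti GPUs provides the computing power for deep learning. The software handles data acquisition, transmission, computation and result output. The measurement algorithm is written in Python; image operations use the OpenCV computer vision library, and the deep learning model is built and trained with Keras on TensorFlow.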

Fig.1 Fish size measurement system

1.2 Dynamic fish size measurement method based on stereo vision

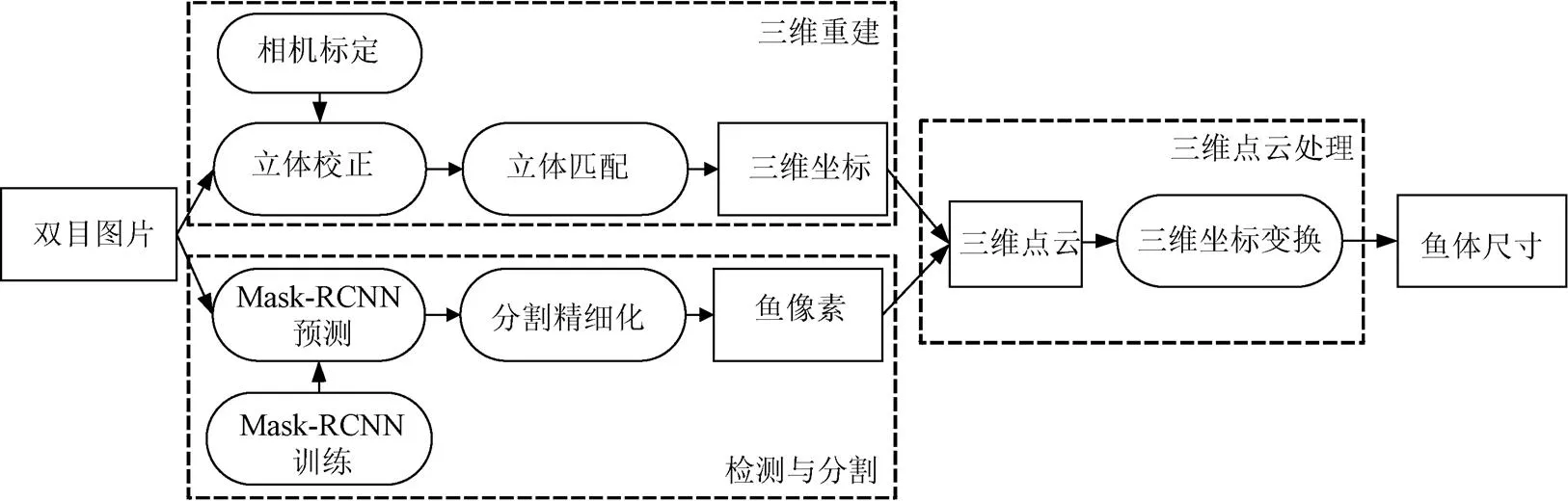

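Unlike existing machine-vision-based fish size measurement methods, this study measures fish size with stereo vision[16] and instance segmentation[17-19]. It makes full use of the 3D information in the images and is suitable for estimating the size of multiple fish of different species in a real aquaculture environment. The pose of each point cloud is computed by plane fitting and ellipse fitting, so fish in different poses can be measured. The algorithm consists of three parts: 3D reconstruction, fish detection and segmentation, and 3D point cloud processing.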

1.2.1 3D reconstruction

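To obtain 3D information from the stereo images, the scene must be reconstructed in 3D. The binocular camera is first calibrated[20]; stereo rectification then makes the two images row-aligned; finally, stereo matching on the rectified images yields the 3D coordinates of the object point corresponding to each pixel, completing the reconstruction.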

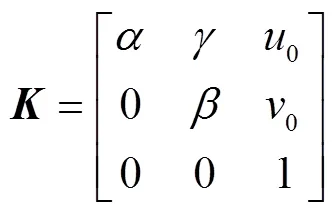

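First, Zhang's calibration method[21-22] is used to obtain the intrinsic matrix $\mathbf{K}$ of each camera and the relative pose between the two cameras. $\mathbf{K}$ relates the camera-frame coordinates $(X_c, Y_c, Z_c)$ of an object point to the pixel position $(u, v)$ of its image point, as shown in Eq.(1):

$$
Z_c\begin{bmatrix}u\\ v\\ 1\end{bmatrix}=\mathbf{K}\begin{bmatrix}X_c\\ Y_c\\ Z_c\end{bmatrix},\qquad
\mathbf{K}=\begin{bmatrix}f_x & \gamma & u_0\\ 0 & f_y & v_0\\ 0 & 0 & 1\end{bmatrix} \tag{1}
$$

where $(u_0, v_0)$ is the pixel coordinate of the camera principal point; $f_x$ and $f_y$ are the scale factors along the $u$ and $v$ axes; and $\gamma$ describes the skew between the $u$ and $v$ axes of the pixel plane.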
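To make Eq.(1) concrete, the following NumPy sketch builds $\mathbf{K}$ and projects a camera-frame point to pixel coordinates. The intrinsic values (700 px focal length, principal point (640, 360)) are made-up illustration numbers, not the calibration results of this system, and the function names are hypothetical.

```python
import numpy as np


def intrinsic_matrix(fx, fy, u0, v0, gamma=0.0):
    """Build the 3x3 intrinsic matrix K of Eq.(1); gamma is the axis skew."""
    return np.array([[fx, gamma, u0],
                     [0.0, fy, v0],
                     [0.0, 0.0, 1.0]])


def project(K, point_cam):
    """Project (Xc, Yc, Zc) in camera coordinates to pixel (u, v)."""
    uvw = K @ np.asarray(point_cam, dtype=float)  # equals Zc * (u, v, 1)
    return uvw[:2] / uvw[2]                       # divide out the depth Zc


# Hypothetical intrinsics, for illustration only.
K = intrinsic_matrix(fx=700.0, fy=700.0, u0=640.0, v0=360.0)
print(project(K, [100.0, -50.0, 1000.0]))  # -> [710. 325.]
```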

Next, a stereo rectification algorithm[23] makes the two image planes coplanar and row-aligned. The rectified binocular camera model is shown in Fig.2.

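Note: $p_{\text{left}}$ and $p_{\text{right}}$ are the left and right image planes; $P$ is the object point; $z$ is the depth of the object point, mm; $(u_l, v_l)$ and $(u_r, v_r)$ are the image points of $P$ on the two image planes; $O_{\text{left}}$ and $O_{\text{right}}$ are the principal points of the left and right cameras; $t$ is the baseline length, mm; $f$ is the focal length after rectification.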

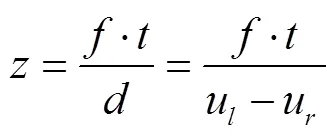

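From the model in Fig.2, the depth of object point $P$ is given by Eq.(2):

$$
z = \frac{f\,t}{d} \tag{2}
$$

where the disparity $d = u_l - u_r$ is the difference between the horizontal coordinates of the image points $(u_l, v_l)$ and $(u_r, v_r)$ of the object point on the left and right image planes; $t$ is the baseline length, mm; and $f$ is the focal length after rectification.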
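Eq.(2) can be checked numerically with a few lines of Python. The 60 mm baseline matches the 6 cm camera described in Section 1.1; the 700 px focal length and the pixel coordinates are hypothetical.

```python
def depth_from_disparity(u_left, u_right, f_px, baseline_mm):
    """Eq.(2): z = f * t / d, with disparity d = u_l - u_r on rectified images."""
    d = u_left - u_right
    if d <= 0:
        # A point in front of a rectified rig always has positive disparity.
        raise ValueError("disparity must be positive")
    return f_px * baseline_mm / d


# Hypothetical focal length (700 px) with the 60 mm baseline of the rig.
print(depth_from_disparity(682.0, 640.0, f_px=700.0, baseline_mm=60.0))  # -> 1000.0
```

Differentiating Eq.(2) gives $|\Delta z| \approx z^2|\Delta d|/(f t)$, so with these assumed values a one-pixel disparity error at 1 m depth changes $z$ by roughly 24 mm, which shows how sensitive depth is to disparity errors with a short baseline.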

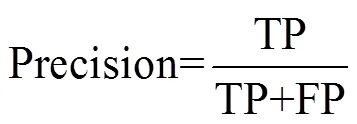

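The disparity is obtained with the Semi-Global Block Matching (SGBM) algorithm[24-25], and stereo matching produces a disparity map[26]. For some pixels no match can be found within the search range because of occlusion, noise, textureless background or disparities that are too large, and no disparity value is obtained. The 3D coordinates of an object point are computed from the coordinates of its image point and the disparity, as shown in Eq.(3):

$$
W\begin{bmatrix}X\\ Y\\ Z\\ 1\end{bmatrix} = \mathbf{Q}\begin{bmatrix}u\\ v\\ d\\ 1\end{bmatrix} \tag{3}
$$

where $(X, Y, Z)$ are the coordinates of the object point; $W$ is a scale factor; $(u, v)$ is the corresponding image point in the left image; and the transformation matrix $\mathbf{Q}$ is given by Eq.(4):

$$
\mathbf{Q} = \begin{bmatrix}1 & 0 & 0 & -u_0\\ 0 & 1 & 0 & -v_0\\ 0 & 0 & 0 & f\\ 0 & 0 & 1/t & 0\end{bmatrix} \tag{4}
$$

with $(u_0, v_0)$ the principal point of the rectified left image (assumed shared by both rectified views), so that $Z = ft/d$ agrees with Eq.(2).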
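A minimal NumPy sketch of Eqs.(3)-(4), assuming a dense disparity map in pixels and a principal point shared by both rectified views. The helper names and numeric values are illustrative, and pixels without a valid disparity are returned as NaN.

```python
import numpy as np


def reprojection_matrix(f_px, baseline_mm, u0, v0):
    """Q of Eq.(4), assuming both rectified views share the principal point (u0, v0)."""
    return np.array([[1.0, 0.0, 0.0, -u0],
                     [0.0, 1.0, 0.0, -v0],
                     [0.0, 0.0, 0.0, f_px],
                     [0.0, 0.0, 1.0 / baseline_mm, 0.0]])


def reproject_to_3d(disparity, Q):
    """Apply Eq.(3) to every pixel; pixels with disparity <= 0 become NaN."""
    disparity = np.asarray(disparity, float)
    h, w = disparity.shape
    v, u = np.mgrid[0:h, 0:w].astype(float)                 # row (v) and column (u) grids
    homog = np.stack([u, v, disparity, np.ones_like(u)], axis=-1)
    xyzw = homog @ Q.T                                       # Q (u, v, d, 1)^T per pixel
    with np.errstate(divide="ignore", invalid="ignore"):
        points = xyzw[..., :3] / xyzw[..., 3:4]              # divide by the scale W
    points[disparity <= 0] = np.nan                          # no match found
    return points


# Hypothetical values: f = 700 px, t = 60 mm, principal point (640, 360).
Q = reprojection_matrix(700.0, 60.0, 640.0, 360.0)
pts = reproject_to_3d(np.full((400, 800), 42.0), Q)
print(pts[360, 710])  # approximately [100, 0, 1000] mm
```

In an OpenCV pipeline like the one described in Section 1.1, `cv2.reprojectImageTo3D(disparity, Q)` performs the same mapping with the `Q` returned by `cv2.stereoRectify`. Disparities returned by `cv2.StereoSGBM_create(...).compute(left, right)` are 16-bit fixed-point values scaled by 16, so they need to be divided by 16 before reprojection.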

1.2.2 Detection and segmentation

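To measure multiple fish, object detection locates each fish in the image and segmentation delineates it, so that the 3D point cloud of each fish can be extracted from the reconstruction.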

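Compared with traditional image segmentation methods, deep-learning-based detection and segmentation are less affected by the application environment. This study uses Mask-RCNN[27] to detect and segment the fish, producing target bounding boxes and segmentation masks. A dataset of 3 712 manually annotated images was built, with 2 662 images in the training set and 750 in the validation set. Only fish that are complete and unoccluded, with both the snout tip and the caudal peduncle inside the image, were annotated.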

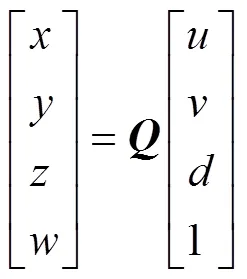

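Some Mask-RCNN segmentations deviate near the fish edge, so the GrabCut[28-29] interactive segmentation algorithm is used to refine them (Fig.3). First, the initial segmentation is eroded and the remaining pixels are labeled as foreground. Next, the initial segmentation is dilated, and the pixels of the bounding box enlarged by a factor of 1.1 that lie outside the dilated region are labeled as background. Finally, GrabCut segments the remaining unlabeled pixels near the fish edge according to these labels. Fig.3 shows that GrabCut effectively improves the segmentation.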
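The labeling step above can be sketched as follows, assuming a boolean Mask-RCNN mask and a bounding box $(x_0, y_0, x_1, y_1)$ in pixels. The erosion/dilation size `k`, the choice of "probable foreground" for the unlabeled band, and the helper names are assumptions; only the 1.1 box enlargement comes from the text. Morphology is done in plain NumPy so the sketch runs without OpenCV; the label values match OpenCV's `cv2.GC_BGD`, `cv2.GC_FGD`, `cv2.GC_PR_BGD` and `cv2.GC_PR_FGD` constants.

```python
import numpy as np

# Label values used by OpenCV GrabCut masks (cv2.GC_BGD, GC_FGD, GC_PR_BGD, GC_PR_FGD).
GC_BGD, GC_FGD, GC_PR_BGD, GC_PR_FGD = 0, 1, 2, 3


def morph(mask, iterations, op):
    """Repeated 3x3 binary erosion (op=np.min) or dilation (op=np.max)."""
    out = np.asarray(mask, bool)
    h, w = out.shape
    for _ in range(iterations):
        p = np.pad(out, 1, mode="constant", constant_values=False)
        out = op(np.stack([p[dy:dy + h, dx:dx + w]
                           for dy in range(3) for dx in range(3)]), axis=0)
    return out


def enlarge_box(box, scale, shape):
    """Scale (x0, y0, x1, y1) about its centre and clip it to the image."""
    x0, y0, x1, y1 = box
    cx, cy = (x0 + x1) / 2.0, (y0 + y1) / 2.0
    hw, hh = (x1 - x0) * scale / 2.0, (y1 - y0) * scale / 2.0
    H, W = shape
    return (max(int(cx - hw), 0), max(int(cy - hh), 0),
            min(int(np.ceil(cx + hw)), W), min(int(np.ceil(cy + hh)), H))


def grabcut_init_mask(mask, box, k=5, scale=1.1):
    """GrabCut labels from a Mask-RCNN mask: eroded core = FGD, band = PR_FGD,
    everything else (including pixels outside the enlarged box) = BGD."""
    mask = np.asarray(mask, bool)
    gc = np.full(mask.shape, GC_BGD, dtype=np.uint8)
    x0, y0, x1, y1 = enlarge_box(box, scale, mask.shape)
    roi = np.zeros(mask.shape, dtype=bool)
    roi[y0:y1, x0:x1] = True
    gc[roi & morph(mask, k, np.max)] = GC_PR_FGD  # unlabeled band near the fish edge
    gc[roi & morph(mask, k, np.min)] = GC_FGD     # pixels that survive erosion
    return gc, (x0, y0, x1, y1)
```

The resulting label mask can then be passed to `cv2.grabCut(image, gc, None, bgd_model, fgd_model, iterCount, cv2.GC_INIT_WITH_MASK)`, with `bgd_model` and `fgd_model` initialized as `np.zeros((1, 65), np.float64)`; afterwards, pixels labeled `GC_FGD` or `GC_PR_FGD` form the refined mask.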

Fig.3 Segmentation results refined by the GrabCut interactive segmentation algorithm

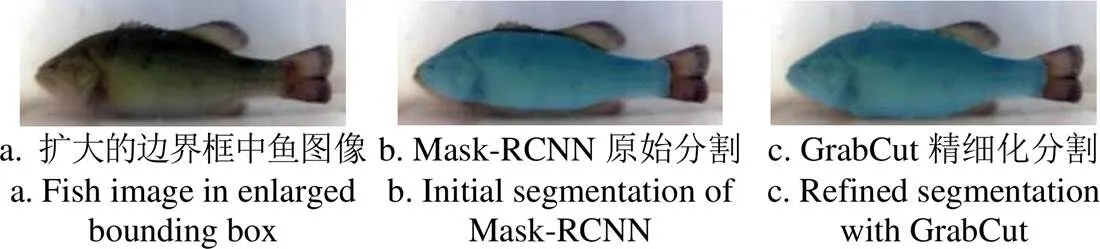

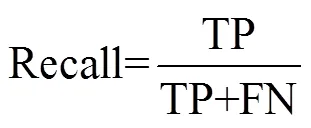

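To evaluate the deep learning model, detection performance is measured by precision $P$ and recall $R$, as shown in Eqs.(5)-(6):

$$
P = \frac{TP}{TP + FP} \tag{5}
$$

$$
R = \frac{TP}{TP + FN} \tag{6}
$$

where $TP$ (true positives) is the number of detections that match an annotated box; $FP$ is the number of false positives, so $TP + FP$ is the number of detected targets; and $FN$ is the number of false negatives, so $TP + FN$ is the number of annotated targets.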
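The text does not state how a detection is matched to an annotated box. The sketch below assumes greedy one-to-one matching by box IoU with a threshold of 0.5, applied after the 0.9 confidence filter used in Section 2; both the matching rule and the function names are assumptions for illustration.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes (x0, y0, x1, y1)."""
    iw = max(min(a[2], b[2]) - max(a[0], b[0]), 0)
    ih = max(min(a[3], b[3]) - max(a[1], b[1]), 0)
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(detections, ground_truth, score_thr=0.9, iou_thr=0.5):
    """Eqs.(5)-(6). detections: list of (box, score); ground_truth: list of boxes."""
    kept = sorted((d for d in detections if d[1] >= score_thr), key=lambda d: -d[1])
    matched = set()
    for box, _ in kept:  # highest score first, each annotation matched at most once
        candidates = [(box_iou(box, gt), j) for j, gt in enumerate(ground_truth)
                      if j not in matched]
        best_iou, best_j = max(candidates, default=(0.0, None))
        if best_j is not None and best_iou >= iou_thr:
            matched.add(best_j)
    tp = len(matched)
    fp = len(kept) - tp               # TP + FP = number of kept detections
    fn = len(ground_truth) - tp       # TP + FN = number of annotations
    precision = tp / (tp + fp) if kept else 0.0
    recall = tp / (tp + fn) if ground_truth else 0.0
    return precision, recall
```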

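Segmentation is evaluated by the mean Intersection Over Union (mIOU)[30]. The Intersection Over Union (IOU) is the ratio of the number of pixels in the intersection of the predicted and annotated masks to the number of pixels in their union, and mIOU is the mean IOU over all predictions.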
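A short NumPy sketch of IOU and mIOU for boolean masks, assuming predictions have already been paired with their annotations. Returning 1.0 when both masks are empty is an arbitrary convention chosen here, and the function names are hypothetical.

```python
import numpy as np


def mask_iou(pred, gt):
    """Pixel IOU: |pred AND gt| / |pred OR gt| for two boolean masks."""
    pred, gt = np.asarray(pred, bool), np.asarray(gt, bool)
    union = np.logical_or(pred, gt).sum()
    return np.logical_and(pred, gt).sum() / union if union else 1.0


def mean_iou(pairs):
    """mIOU: mean of the IOUs over all (prediction, annotation) pairs."""
    return float(np.mean([mask_iou(p, g) for p, g in pairs]))
```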

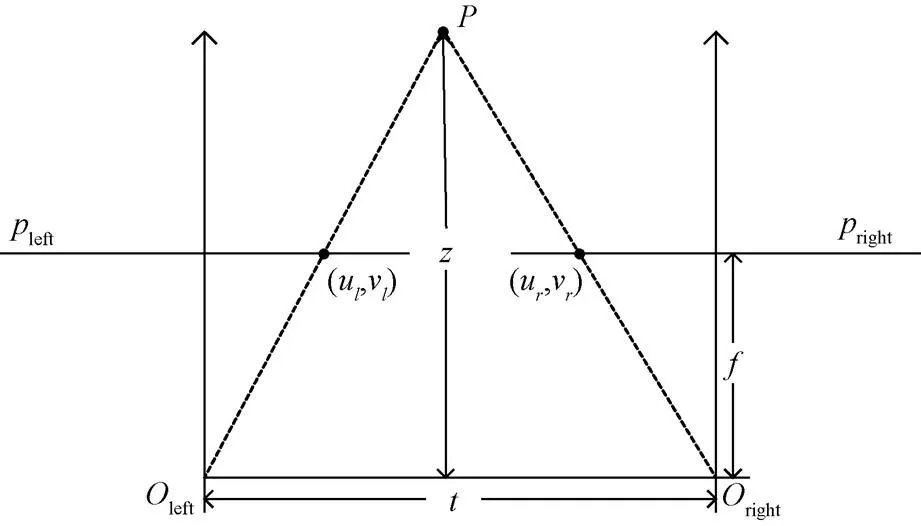

1.2.3 3D point cloud processing

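The 3D point cloud of each fish is extracted from the reconstruction using the detection and segmentation results. Because the detected fish appear at random positions and orientations, the point cloud must be normalized by coordinate transformations. Two 3D transformations move the fish center to the coordinate origin and align the length, width and thickness directions of the fish with the three coordinate axes; the body length and width are then computed from the extent of the point cloud along the horizontal and vertical axes of the new coordinate system. The procedure is shown in Fig.4.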

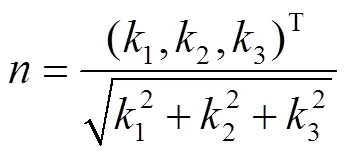

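The fish contour is extracted from the segmentation by erosion, and a plane is fitted to the 3D point cloud of the contour (Fig.4a). Let the plane equation be $a_1x + a_2y + a_3z + 1 = 0$, where $a_1$, $a_2$ and $a_3$ are the fitting coefficients. Solving for them by least squares gives the unit normal vector $\mathbf{n}$ of the plane containing the fish contour, as shown in Eq.(7):

$$
\mathbf{n} = \frac{(a_1, a_2, a_3)^{\mathrm T}}{\sqrt{a_1^2 + a_2^2 + a_3^2}} \tag{7}
$$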
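The contour extraction and Eq.(7) can be sketched in NumPy as below. The contour is taken as the mask minus its 3×3 erosion, and the plane is solved as the least-squares problem $[x\ y\ z]\,\mathbf{a} = -1$. The one-pixel band width and the function names are assumptions. The form $a_1x + a_2y + a_3z + 1 = 0$ cannot represent a plane through the camera origin, which only occurs when a fish is seen exactly edge-on.

```python
import numpy as np


def contour_mask(mask):
    """Fish contour as the mask minus its 3x3 binary erosion (a one-pixel band)."""
    mask = np.asarray(mask, bool)
    h, w = mask.shape
    p = np.pad(mask, 1, mode="constant", constant_values=False)
    eroded = np.min(np.stack([p[dy:dy + h, dx:dx + w]
                              for dy in range(3) for dx in range(3)]), axis=0)
    return mask & ~eroded


def fit_plane(points):
    """Least-squares fit of a1*x + a2*y + a3*z + 1 = 0 to an (N, 3) point array.

    Returns the coefficients a = (a1, a2, a3) and the unit normal of Eq.(7)."""
    P = np.asarray(points, float)
    a, *_ = np.linalg.lstsq(P, -np.ones(len(P)), rcond=None)
    return a, a / np.linalg.norm(a)
```

In the pipeline, `points` would be the reconstructed 3D coordinates at the pixels where `contour_mask` is true, with NaN entries (pixels without a disparity) removed first.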

Note: The rectangle is the plane fitted to the contour point cloud; the $x$, $y$ and $z$ axes are the axes before the first transformation, mm; $O'$ and the $x'$, $y'$ and $z'$ axes are the origin and axes after the first transformation, mm; the ellipse is fitted to the projections of the contour points onto the fitted plane; $O''$ and the $x''$, $y''$ and $z''$ axes are the origin and axes after the second transformation, mm.

Fig.4 Procedure of coordinate transformations

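All 3D points $(x, y, z)^{\mathrm T}$ in the original coordinate system are transformed so that the fitted plane becomes the $x'O'y'$ plane of the new coordinate system (Fig.4a). The transformed coordinates $(x', y', z')^{\mathrm T}$ are related to the original coordinates by Eq.(8):

$$
\begin{bmatrix}x'\\ y'\\ z'\end{bmatrix} = \begin{bmatrix}\mathbf{i}^{\mathrm T}\\ \mathbf{j}^{\mathrm T}\\ \mathbf{n}^{\mathrm T}\end{bmatrix}\left(\begin{bmatrix}x\\ y\\ z\end{bmatrix} - \mathbf{O}'\right) \tag{8}
$$

where $\mathbf{O}'$ is any point on the fitted plane, $\mathbf{i}$ is a unit vector lying in that plane, and $\mathbf{j} = \mathbf{n} \times \mathbf{i}$ is the unit vector obtained from the cross product of $\mathbf{n}$ and $\mathbf{i}$.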
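Eq.(8) only requires $\mathbf{O}'$ to lie on the plane and $\mathbf{i}$ to lie in it, so the sketch below makes two assumed choices: $\mathbf{O}'$ is the orthogonal projection of the point-cloud centroid onto the plane, and $\mathbf{i}$ is the camera $x$ axis projected onto the plane (falling back to the $y$ axis when the normal is nearly parallel to $x$). The function names are hypothetical.

```python
import numpy as np


def point_on_plane(a, reference):
    """Orthogonal projection of a reference point onto the plane a . p + 1 = 0."""
    a = np.asarray(a, float)
    c = np.asarray(reference, float)
    return c - (a @ c + 1.0) / (a @ a) * a


def plane_frame(normal, origin, helper=(1.0, 0.0, 0.0)):
    """Rotation R whose rows are (i, j, n): i in the plane, j = n x i, n the unit normal."""
    n = np.asarray(normal, float)
    n = n / np.linalg.norm(n)
    h = np.asarray(helper, float)
    if abs(h @ n) > 0.9:              # helper nearly parallel to n: use the y axis instead
        h = np.array([0.0, 1.0, 0.0])
    i = h - (h @ n) * n               # project the helper axis onto the plane
    i = i / np.linalg.norm(i)
    j = np.cross(n, i)                # completes a right-handed frame (i x j = n)
    return np.vstack([i, j, n]), np.asarray(origin, float)


def to_plane_coords(points, R, origin):
    """Eq.(8): (x', y', z')^T = R [(x, y, z)^T - O'] for an (N, 3) array."""
    return (np.asarray(points, float) - origin) @ R.T
```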

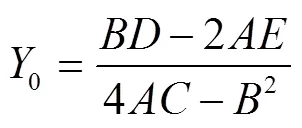

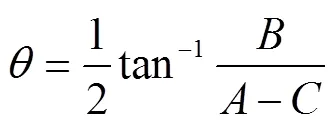

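The projection of one fish's contour points onto the $x'O'y'$ plane is fitted with a rotated ellipse (Fig.4b), written as the general conic $Ax'^2 + Bx'y' + Cy'^2 + Dx' + Ey' + F = 0$. The ellipse fitting method[31-32] gives the coefficients $A$ to $F$, from which Eqs.(9)-(11) give the ellipse center $(x'_0, y'_0)$ and the inclination $\theta$ (rad) of the major axis:

$$
x'_0 = \frac{2CD - BE}{B^2 - 4AC} \tag{9}
$$

$$
y'_0 = \frac{2AE - BD}{B^2 - 4AC} \tag{10}
$$

$$
\theta = \frac{1}{2}\operatorname{atan2}\left(-B,\ C - A\right) \tag{11}
$$

where the coefficients are scaled so that $A + C > 0$ and $\theta$ is measured from the $x'$ axis. This gives the ellipse center $\mathbf{O}'' = (x'_0, y'_0, 0)^{\mathrm T}$, the major-axis direction vector $\mathbf{l} = (\cos\theta, \sin\theta, 0)^{\mathrm T}$ and the minor-axis direction vector $\mathbf{w} = (-\sin\theta, \cos\theta, 0)^{\mathrm T}$.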
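The sketch below fits the conic with the direct least-squares method of Fitzgibbon et al.[31], in the numerically stable reduced form usually attributed to Halir and Flusser, and then applies Eqs.(9)-(11). Which variant the authors used is not stated; centering the points before fitting (for conditioning) and the function names are assumptions.

```python
import numpy as np


def fit_ellipse(x, y):
    """Direct least-squares ellipse fit (Fitzgibbon et al., reduced form).

    Returns (A, B, C, D, E, F) of A x^2 + B xy + C y^2 + D x + E y + F = 0."""
    x = np.asarray(x, float)
    y = np.asarray(y, float)
    D1 = np.column_stack([x * x, x * y, y * y])    # quadratic terms
    D2 = np.column_stack([x, y, np.ones_like(x)])  # linear and constant terms
    S1, S2, S3 = D1.T @ D1, D1.T @ D2, D2.T @ D2
    T = -np.linalg.solve(S3, S2.T)                 # linear part = T @ quadratic part
    M = S1 + S2 @ T                                # reduced scatter matrix
    C1_inv = np.array([[0.0, 0.0, 0.5], [0.0, -1.0, 0.0], [0.5, 0.0, 0.0]])
    _, vecs = np.linalg.eig(C1_inv @ M)
    vecs = np.real(vecs)
    cond = 4.0 * vecs[0] * vecs[2] - vecs[1] ** 2  # ellipse constraint 4AC - B^2 > 0
    quad = vecs[:, np.argmax(cond)]
    return np.concatenate([quad, T @ quad])


def ellipse_center_angle(coeffs):
    """Eqs.(9)-(11): centre (x0, y0) and major-axis angle theta (rad) from the x' axis."""
    A, B, C, D, E, _ = coeffs
    if A + C < 0:  # fix the overall sign so the quadratic form is positive definite
        A, B, C, D, E = -A, -B, -C, -D, -E
    den = B * B - 4.0 * A * C
    x0 = (2.0 * C * D - B * E) / den
    y0 = (2.0 * A * E - B * D) / den
    theta = 0.5 * np.arctan2(-B, C - A)
    return x0, y0, theta


def fit_projected_contour(xp, yp):
    """Return the centre O'', the axis vectors l and w, and theta."""
    mx, my = float(np.mean(xp)), float(np.mean(yp))
    # Centre the data for better conditioning, then shift the centre back.
    x0, y0, theta = ellipse_center_angle(
        fit_ellipse(np.asarray(xp) - mx, np.asarray(yp) - my))
    center = np.array([x0 + mx, y0 + my, 0.0])
    l = np.array([np.cos(theta), np.sin(theta), 0.0])   # major axis (length direction)
    w = np.array([-np.sin(theta), np.cos(theta), 0.0])  # minor axis (width direction)
    return center, l, w, theta
```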

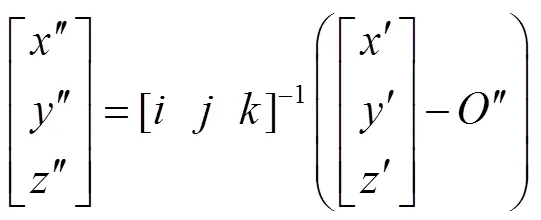

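All 3D points $(x', y', z')^{\mathrm T}$ of the fish's point cloud are then transformed into a new coordinate system whose origin is the ellipse center $\mathbf{O}''$, whose $x''$ axis is the major-axis direction $\mathbf{l}$, whose $y''$ axis is the minor-axis direction $\mathbf{w}$ and whose $z''$ axis is the plane normal, as shown in Eq.(12):

$$
\begin{bmatrix}x''\\ y''\\ z''\end{bmatrix} = \begin{bmatrix}\mathbf{l}^{\mathrm T}\\ \mathbf{w}^{\mathrm T}\\ \mathbf{k}^{\mathrm T}\end{bmatrix}\left(\begin{bmatrix}x'\\ y'\\ z'\end{bmatrix} - \mathbf{O}''\right) \tag{12}
$$

where $\mathbf{k} = (0, 0, 1)^{\mathrm T}$ is the plane normal expressed in the $x'y'z'$ coordinate system.

The length and width of the fish are then obtained from the extent of the point cloud along the $x''$ and $y''$ axes, respectively.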
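Eq.(12) and the extent measurement reduce to a rotation followed by a max-minus-min along each axis. This sketch uses hypothetical function names and the raw extent as described; because a single stray point changes the raw extent, a percentile-based extent is a possible variation rather than part of the method.

```python
import numpy as np


def to_fish_frame(points_p, center, l, w, k=(0.0, 0.0, 1.0)):
    """Eq.(12): express (N, 3) points from the x'y'z' frame in the x''y''z'' fish frame."""
    R2 = np.vstack([np.asarray(l, float), np.asarray(w, float), np.asarray(k, float)])
    return (np.asarray(points_p, float) - np.asarray(center, float)) @ R2.T


def length_width(points_fish):
    """Length and width as the extent (max - min) of the cloud along x'' and y''."""
    ext = points_fish.max(axis=0) - points_fish.min(axis=0)
    return float(ext[0]), float(ext[1])
```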

In summary, the overall framework of the stereo-vision-based dynamic fish size measurement method is shown in Fig.5.

Note: Mask-RCNN is a convolutional neural network for instance segmentation.

2 Results and Analysis

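Using the camera parameters and the trained Mask-RCNN model, the proposed method extracts the 3D point cloud of each fish and computes its body length and width through coordinate transformations. The results are compared with manual measurements to verify the measurement accuracy.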

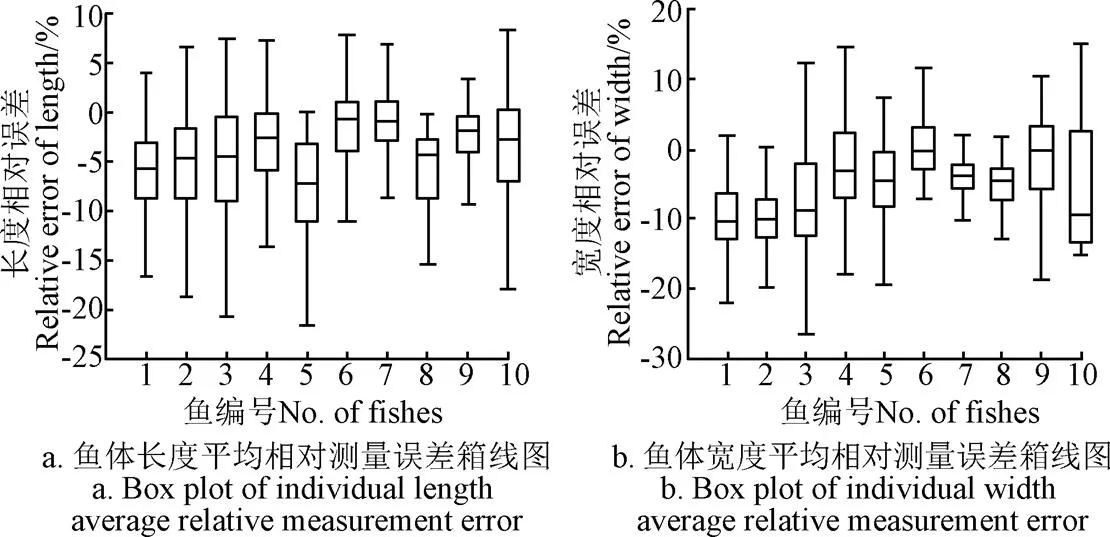

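The results in Fig.6 show that the mean relative errors of the method are 4.7% for body length and 9.2% for body width. The computed values also tend to be lower than the manual measurements. Analysis shows that the width error is mainly caused by parts of the segmentation missing near the edge, and the length error mainly by fish bending or partially missing segmentation.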
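The error statistics can be computed as below, assuming the usual definition of relative error, $|\hat{L} - L|/L$, with the manual measurement $L$ as reference; the signed variant shows the tendency of the computed values to fall below the manual ones. The function names are hypothetical.

```python
import numpy as np


def mean_relative_error(estimates, reference):
    """Mean of |estimate - reference| / reference, e.g. over repeated frames of one fish."""
    est = np.asarray(estimates, float)
    return float(np.mean(np.abs(est - reference) / reference))


def mean_signed_error(estimates, reference):
    """Mean of (estimate - reference) / reference; negative means the estimates run low."""
    est = np.asarray(estimates, float)
    return float(np.mean((est - reference) / reference))
```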

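Note: The abscissa of the box plot is the fish number; each box corresponds to several computed results for the same fish; the box spans the interquartile range of the results; the line inside the box is the median; and the top and bottom lines are the maximum and minimum.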

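To evaluate the deep learning model, the confidence threshold was set to 0.9; Eqs.(5)-(6) give a precision of 88% and a recall of 84%. After GrabCut refinement, the mIOU increased from 78% to 81%. The image processing rate was 2.3 Hz. These results show that the trained Mask-RCNN achieves good detection performance and that the refinement based on morphological operations and GrabCut effectively improves segmentation accuracy.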

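Table 1 lists the mean relative errors of the size measurements for the different species. The results show that the deep learning model generalizes well and that the method is applicable to size calculation for different fish species.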

Table 1 Dimension calculation results for different fish species

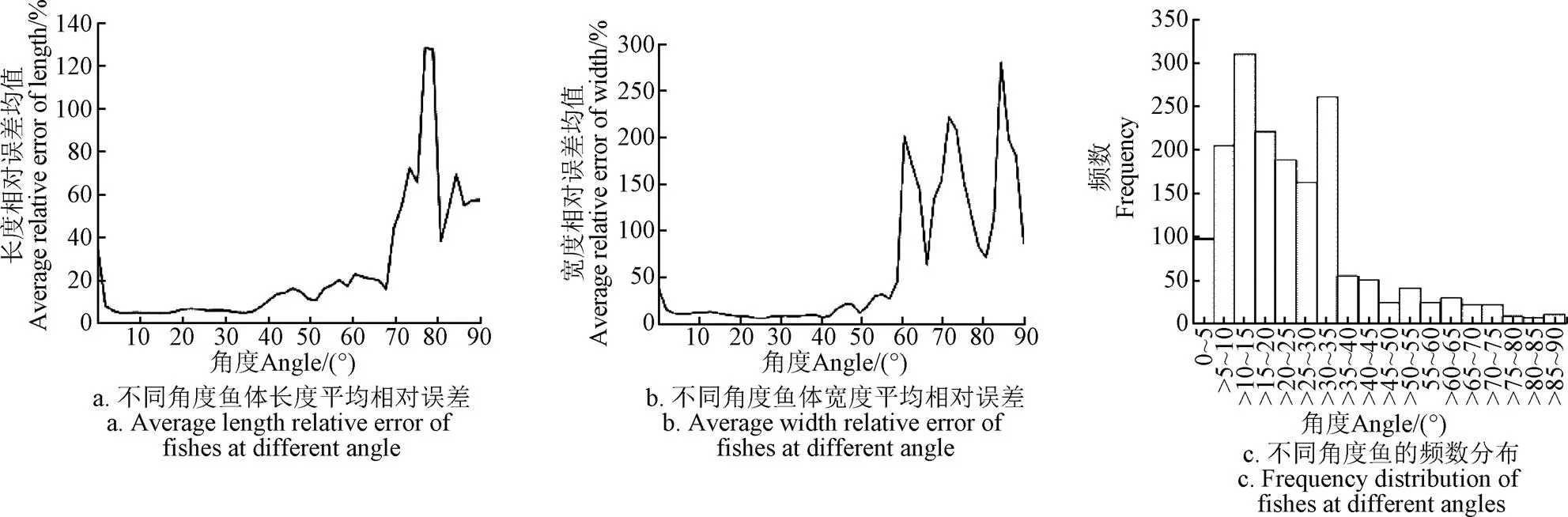

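The angle between the fitted plane and the image plane ($xOy$ plane of the camera coordinate system) represents the angle between the fish's orientation and the image plane. The mean relative measurement error of the point clouds in each angle interval and the frequency distribution of the angle are shown in Fig.7.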

Fig.7 Mean relative errors of length and width at different angles, and frequency histogram of the angles

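As Fig.7 shows, under the annotation rule of the dataset, the angle between the contour fitting plane and the image plane lies within 0°-40° for 85% of the point clouds, and the results in this range are relatively accurate; beyond 40°, the mean relative error increases considerably. The stereo-vision-based dynamic fish size measurement algorithm is therefore suited to fish within 40° of the image plane, and an angle condition can be set to further filter the point clouds and improve measurement accuracy.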
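The angle used in Fig.7 and the suggested angle filter can be sketched as follows, assuming the image plane is normal to the camera optical axis $(0, 0, 1)^{\mathrm T}$, so that the angle between the two planes equals the angle between their normals, folded into $[0°, 90°]$. The 40° default follows the text; the function names are hypothetical.

```python
import numpy as np


def plane_tilt_deg(normal):
    """Angle in degrees between the fitted contour plane and the image plane."""
    n = np.asarray(normal, float)
    cos_angle = abs(n[2]) / np.linalg.norm(n)  # |n . (0, 0, 1)| / |n|
    return float(np.degrees(np.arccos(np.clip(cos_angle, 0.0, 1.0))))


def keep_frontal(clouds_with_normals, max_deg=40.0):
    """Keep only point clouds whose fitted plane is within max_deg of the image plane."""
    return [cloud for cloud, n in clouds_with_normals if plane_tilt_deg(n) <= max_deg]
```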

3 Conclusions

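This study developed and built an underwater binocular monitoring system for fish farming and proposed a dynamic fish size measurement method based on stereo vision and deep learning. Without restricting the free movement of the fish, it measures the dimensions of multiple fish of different species, sizes and poses. The main conclusions are as follows: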

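1) 3 712 underwater fish images were collected and annotated with a polygon labeling tool to build a fish segmentation dataset for the aquaculture environment, which was used to train a Mask Region Convolution Neural Network (Mask-RCNN) model for fish detection and segmentation. On the validation set the model achieved a precision of 88% and a recall of 84%. Refining the edges with the GrabCut interactive segmentation algorithm raised the mean Intersection Over Union (mIOU) of the segmentation from 78% to 81%, improving segmentation accuracy.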

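2) A plane is fitted to the 3D point cloud of the fish contour, and the contour points projected onto this plane are fitted with a rotated ellipse to obtain the position and pose of the fish. Transforming the point cloud into a coordinate system whose axes follow the length, width and thickness directions allows fish at different angles to be measured. Experiments show that the measurement accuracy is high when the angle between the fish and the image plane is below 40°.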

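3) Compared with manual measurements, the mean relative errors of the computed length and width were 4.7% and 9.2%, respectively, at a computation rate of 2 Hz. The proposed method for measuring swimming fish under water is accurate, fast and generalizes well, and larger fish have lower mean relative errors. It provides a feasible approach to contact-free size measurement of swimming fish in aquaculture.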

[1] Maule A G, Tripp R A, Kaattari S L, et al. Stress alters immune function and disease resistance in chinook salmon (Oncorhynchus tshawytscha)[J]. Journal of Endocrinology, 1989, 120(1): 135-142.

[2] Zhang Zhiqiang, Niu Zhiyou, Zhao Siming, et al. Weight grading of freshwater fish based on computer vision[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2011, 27(2): 350-354. (in Chinese with English abstract)

[3] Rathi D, Jain S, Indu S. Underwater fish species classification using convolutional neural network and deep learning[C]//Ninth International Conference on Advances in Pattern Recognition (ICAPR), Bangalore, India, 2017.

[4] Aliyu I, Gana K J, Musa A A, et al. A proposed fish counting algorithm using digital image processing technique[J]. Abubakar Tafawa Balewa University Journal of Science, Technology and Education, 2017, 5(1): 1-11.

[5] Zhang Song, Yang Xinting, Wang Yizhong, et al. Automatic fish population counting by machine vision and a hybrid deep neural network model[J]. Animals, 2020, 10(2): 364-381.

[6] Zhang Jialin, Xu Lihong, Liu Shijing. Classification of Atlantic salmon feeding behavior based on underwater machine vision[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2020, 36(13): 158-164. (in Chinese with English abstract)

[7] Issac A, Dutta M K, Sarkar B. Computer vision based method for quality and freshness check for fish from segmented gills[J]. Computers and Electronics in Agriculture, 2017, 139: 10-21.

[8] Duan Yan’e, Li Daoliang, Li Zhenbo, et al. Review on visual characteristic measurement research of aquatic animals based on computer vision[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2015, 31(15): 1-11. (in Chinese with English abstract)

[9] Hao Mingming, Yu Helong, Li Daoliang. The measurement of fish size by machine vision-a review[C]//International Conference on Computer and Computing Technologies in Agriculture, Beijing, China, 2015.

[10] Saberioon M, Gholizadeh A, Cisar P, et al. Application of machine vision systems in aquaculture with emphasis on fish: State-of-the-art and key issues[J]. Reviews in Aquaculture, 2017, 9(4): 369-387.

[11] Yu Xinjie, Wu Xiongfei, Wang Jianping, et al. Rapid detecting method for Pseudosciaena crocea morphological parameters based on the machine vision[J]. Journal of Integration Technology, 2014(5): 45-51. (in Chinese with English abstract)

[12] Monkman G G, Hyder K, Kaiser M J, et al. Using machine vision to estimate fish length from images using regional convolutional neural networks[J]. Methods in Ecology and Evolution, 2019, 10(12): 2045-2056.

[13] Torisawa S, Kadota M, Komeyama K, et al. A digital stereo-video camera system for three-dimensional monitoring of free-swimming Pacific bluefin tuna, Thunnus orientalis, cultured in a net cage[J]. Aquatic Living Resources, 2011, 24(2): 107-112.

[14] Muñoz-Benavent P, Andreu-García G, Valiente-González J M, et al. Enhanced fish bending model for automatic tuna sizing using computer vision[J]. Computers and Electronics in Agriculture, 2018, 150: 52-61.

[15] Pérez D, Ferrero F J, Alvarez I, et al. Automatic measurement of fish size using stereo vision[C]//2018 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Houston, USA, 2018.

[16] Tippetts B, Lee D J, Lillywhite K, et al. Review of stereo vision algorithms and their suitability for resource-limited systems[J]. Journal of Real-Time Image Processing, 2016, 11(1): 5-25.

[17] Lin Tsungyi, Maire M, Belongie S, et al. Microsoft COCO: Common objects in context[C]//European Conference on Computer Vision, Zurich, Switzerland, 2014.

[18] Garcia R, Prados R, Quintana J, et al. Automatic segmentation of fish using deep learning with application to fish size measurement[J]. ICES Journal of Marine Science, 2020, 77(4): 1354-1366.

[19] Deng Ying, Wu Huarui, Zhu Huaji. Recognition and counting of citrus flowers based on instance segmentation[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2020, 36(7): 200-207. (in Chinese with English abstract)

[20] Yang Shoubo, Gao Yang, Liu Zhen, et al. A calibration method for binocular stereo vision sensor with short-baseline based on 3D flexible control field[J/OL]. Optics and Lasers in Engineering, 2019, 124, [2019-08-20], https://doi.org/10.1016/j.optlaseng.2019.105817.

[21] Zhang Zhengyou. Flexible camera calibration by viewing a plane from unknown orientations[C]// Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 1999.

[22] Chi Dexia, Wang Yang, Ning Liqun, et al. Experimental research of camera calibration based on Zhang’s method[J]. Journal of Chinese Agricultural Mechanization, 2015, 36(2): 287-289. (in Chinese with English abstract)

[23] Fetić A, Jurić D, Osmanković D. The procedure of a camera calibration using camera calibration toolbox for MATLAB[C]//2012 Proceedings of the 35th International Convention MIPRO, Opatija, Croatia, 2012.

[24] Hirschmüller H. Stereo processing by semi-global matching and mutual information[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2007, 30(2): 328-341.

[25] Lee Y, Park M G, Hwang Y, et al. Memory-efficient parametric semiglobal matching[J]. IEEE Signal Processing Letters, 2017, 25(2): 194-198.

[26] Ttofis C, Kyrkou C, Theocharides T. A hardware-efficient architecture for accurate real-time disparity map estimation[J]. Association for Computing Machinery Transactions on Embedded Computing Systems (TECS), 2015, 14(2): 1-26.

[27] He Kaiming, Gkioxari G, Dollár P, et al. Mask R-CNN[C]//Proceedings of 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 2017.

[28] Rother C, Kolmogorov V, Blake A. GrabCut: Interactive foreground extraction using iterated graph cuts[J]. Association for Computing Machinery Transactions on Graphics (TOG), 2004, 23(3): 309-314.

[29] Li Yubing, Zhang Jinbo, Gao Pengs, et al. Grab cut image segmentation based on image region[C]//2018 IEEE 3rd International Conference on Image, Vision and Computing (ICIVC), Chongqing, China, 2018.

[30] Wei Yunchao, Feng Jiashi, Liang Xiaodan, et al. Object region mining with adversarial erasing: A simple classification to semantic segmentation approach[C]// Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017.

[31] Fitzgibbon A, Pilu M, Fisher R B. Direct least square fitting of ellipses[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 1999, 21(5): 476-480.

[32] Cho M. Performance comparison of two ellipse fitting-based cell separation algorithms[J]. Journal of Information and Communication Convergence Engineering, 2015, 13(3): 215-219.

Measurement of dynamic fish dimension based on stereoscopic vision

Li Yanjun1, Huang Kangwei1,2, Xiang Ji3※

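(1. Zhejiang University City College, Hangzhou 310015, China; 2. College of Control Science and Engineering, Zhejiang University, Hangzhou 310027, China; 3. College of Electrical Engineering, Zhejiang University, Hangzhou 310027, China)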

Abstract: Fish dimension information, especially length, is very important in aquaculture, where it can be used for grading and for developing feeding strategies. To obtain accurate fish size information, the traditional measurement method has to take the fish out of the water, which is time-consuming and laborious and may also affect the growth rate of the fish. In this study, a dynamic measurement method for fish body dimensions based on stereo vision was proposed, which can calculate the dimensions of multiple fish simultaneously without restricting their movement. It was implemented and verified on an intelligent monitoring system designed and built by the authors, with attention to hardware compatibility and overall performance. Through this system, videos of underwater fish were captured and uploaded to a remote cloud server for further processing. Three main procedures were then developed for obtaining the size of fish swimming freely in a real aquaculture environment: 3D reconstruction, fish detection and segmentation, and 3D point cloud processing. In the 3D reconstruction part, 3D information was restored from binocular images by camera calibration, stereo rectification and stereo matching in sequence. First, the binocular camera was calibrated with a chessboard to obtain the camera parameters, including the intrinsic matrices and the relative translation and rotation between the left and right cameras. Then, the captured binocular images were rectified to be row-aligned according to the calibrated parameters. Finally, stereo matching based on the semi-global block matching (SGBM) method was applied to the rectified image pairs to extract 3D information and complete the 3D reconstruction.
In the fish detection and segmentation part, a Mask Region Convolution Neural Network (Mask-RCNN) was trained to locate fish in the image with bounding boxes and to extract the pixels of the fish in each bounding box as a raw segmentation. The raw segmentation was refined with the interactive segmentation method GrabCut combined with morphological processing to correct bias around the edge. In the 3D point cloud processing part, two coordinate transformations were carried out to unify the point clouds of fish with various locations and orientations. The transformation parameters were calculated from a 3D plane fit of the contour point cloud and a rotated-ellipse fit of the transformed point cloud, respectively. After transformation, the length and width directions of the fish point cloud were parallel to the coordinate axes, so the length and width of the fish were the ranges of the point cloud along the abscissa and ordinate axes. Experiments were conducted with the self-designed system, and results for fish of various species and sizes were compared with manual measurements. The average relative estimation error was about 4.7% for length and about 9.2% for width. In terms of running time, the system processed 2.5 frames per second for fish dimension calculation. The trained Mask-RCNN model achieved a precision of 88% and a recall of 84% with satisfactory generalization. After segmentation refinement, the mean intersection over union increased from 78% to 81%, demonstrating the effectiveness of the refinement method. The results also showed that the longer the fish, the smaller the average relative measurement error.
These results demonstrate that the proposed method, which combines stereo vision, deep-learning-based image segmentation and coordinate transformation, can measure the dimensions of multiple underwater fish. This study provides a new approach to flexible fish size measurement and can improve dynamic information perception for rapid, non-destructive detection of underwater fish in aquaculture.

Keywords: fish; machine vision; three-dimensional reconstruction; image segmentation; deep learning; Mask-RCNN; 3D point cloud processing


Li Yanjun, Huang Kangwei, Xiang Ji. Measurement of dynamic fish dimension based on stereoscopic vision[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2020, 36(21): 220-226. (in Chinese with English abstract) doi:10.11975/j.issn.1002-6819.2020.21.026 http://www.tcsae.org

Received date: 2020-04-09

Revised date: 2020-06-16

Foundation item: Key Research and Development Program of Zhejiang Province (2019C01150)

Biography: Li Yanjun, Ph.D., Professor, research interests: intelligent control and optimization, complex system modeling. Email: liyanjun@zucc.edu.cn

Corresponding author: Xiang Ji, Ph.D., Professor, research interests: autonomous underwater vehicles, networked control. Email: jxiang@zju.edu.cn


CLC number: TP391.41

Document code: A

Article ID: 1002-6819(2020)-21-0220-07