Delta-Davidson method for interior eigenproblem in many-spin systems∗

Haoyu Guan(关浩宇) and Wenxian Zhang(张文献)

School of Physics and Technology,Wuhan University,Wuhan 430072,China

Keywords: numerical exact method,interior eigenvalue,delta function filter,subspace diagonalization

1. Introduction

Computation of eigenvalues and eigenstates of quantum spin systems is an important task in quantum information and condensed matter physics.[1,2]With the full spectrum known, one can trivially explore many interesting properties of the physical systems, such as thermodynamic properties,quantum phase transitions, and evolution dynamics.[3-6]To solve the eigenproblem, direct numerical diagonalization or naively solving the time-independent Schr¨odinger equation of the quantum many-spin systems is typically unfeasible, as physical systems of interest often involve a huge Hilbert space whose dimension grows exponentially with the system size.Even for a modest number of spins, say 20, the full exact diagonalization requires excessive storage and computing time.

Instead of obtaining the full spectrum, one may be only interested in a set of eigenvalues or eigenstates for parts of the spectrum in a certain energy range. For example, the ground state is usually enough to identify a quantum phase transition.[2,7]Due to its great importance and wide application in condensed matter physics, many numerical methods,such as quantum Monte Carlo,[8,9]the traditional density matrix renormalization group (DMRG) method,[10]and matrix product states (MPS)[11]have been developed to solve the ground states of quantum many-body systems,where the latter two are for one-dimensional systems while higher dimensional tensor network states[12,13]are well-established for some twodimensional and ladder systems. It is known that the efficiency of the tensor network approaches is limited to the systems whose ground states must obey the area law.[14,15]For the quantum Monte Carlo method, it suffers from the widely known sign problem,[16,17]which often requires an exponential amount of computational resources to obtain a reasonable accuracy. In practice,the imaginary time propagation and the Lanczos method[18]are also widely used to find the ground states. However, little attention has been paid to the interior eigenvalues and eigenstates.

The interior eigenstates are useful in understanding the universality of the entanglement entropy,[19]the knowledge of eigenstates near the many-body mobility edge helps to locate many-body localization transition,[20,21]and the level spacing statistics in the central spectrum could distinguish quantum chaos from many-body integrable phases.[22,23]It has been shown that both typical excited eigenstates for quadratic Hamiltonians and eigenstates in the thermal phase exhibit a volume law;[19,20]i.e., the von Neuman entropy of the reduced density matrix of a subsystem scales with the subsystem’s volume. Thus, the tensor network approaches are not suitable for solving the interior eigenproblem. To compute the interior eigenstates,a strategy of matrix spectroscopy is often invoked.[24-26]It aims at finding a small set of eigenstates near a certain energy λ.The essential idea may be illustrated by the shift-invert method,[27]which casts the eigenvalues close to λ to the edges of the spectrum of G through a spectral transformation G=(H −λI)−1, where I is the identity matrix and we drop explicitly writing it henceforth. In this way,the original problem is transformed to finding the extreme eigenstates.However,this method suffers the rapid scaling of resources[28]and alternative methods without matrix factorization are explored and discussed below.

Several methods, requiring only matrix-vector products,have been designed to compute interior eigenstates. The Davidson method[29]is a preconditioned version of the Lanczos method, but it can only be effective when the matrix is nearly diagonal.[30]Similarly, the harmonic Davidson method[31,32]is based on a spectral transformation utilizing the harmonic Ritz values, with iterative subspaces of rather high dimension. There is also the filter-diagonalization method,[33-35]which uses short-time propagation with Fourier energy filtering at the desired spectrum. However, the time propagation of states is not necessarily needed and may be replaced by the spectral filters.[36]Hybrid techniques may enhance the efficiency of the methods mentioned above. The idea of hybridizing the Lanczos method or the Davidson method with a spectral filter is thus proposed,where the filter is designed to tune out the undesirable portion of the spectrum,while amplifying the desired portion.Following this idea,several methods have been constructed. The two layer Lanczos-Green function iteration algorithm[25]applies the Dyson expansion of the Green’s function to a Lanczos vector, while running the risk of possible divergence of the Dyson expansion. The Chebyshev filter diagonalization method[37]applies the Chebyshev expansion to the rectangular window function,which may become inefficient in regions with high density of states. Recently a thick-restart Lanczos algorithm with polynomial filtering techniques has been also proposed.[38,39]

In this paper,we propose the delta-Davidson(DELDAV)method that couples the idea of Davidson method with the Dirac delta function, for simultaneous computation of the eigenvalues and eigenstates. While it belongs to the matrix spectroscopy method, the DELDAV focuses on the neardegenerate problem (high density of states). We employ the Dirac delta function filter (delta filter) δ(H −λ) to map the eigenvalues near λ to very large positive values. Such a delta filter has the advantage of efficiently damping the unwanted part of the spectrum and thus greatly accelerating the convergence. The employed Davidson-type methods provide remarkable flexibility in augmenting the basis with new vectors,keeping both the subspace dimension and the reorthogonalization cost small(compared with Lanczos-type methods).[40]For real problems in many-spin systems,the DELDAV method is a very efficient and robust tool. Test cases are presented,with finding 10 eigenstates at the highest density of states region for the Hilbert space dimension up to 106.

The remainder of the paper is organized as follows. We briefly review the Chebyshev-Davidson method in Section 2,to introduce the basic idea of the filtration and the subspace diagonalization. The formalism of the DELDAV method and its applications to the quantum spin models are given in detail in Section 3. In Section 4,we describe two specific many-spin models and present the results of our numerical experiments.A conclusion is given in Section 5.

2. Review on Chebyshev-Davidson method

The Chebyshev-Davidson (CD) method is a Davidsontype subspace iteration using Chebyshev polynomial filters for a large symmetric/Hermitian eigenvalue problem.[40,41]This method combines the acceleration power of the Chebyshev filtering technique and the flexibility and robustness of the Davidson approach.

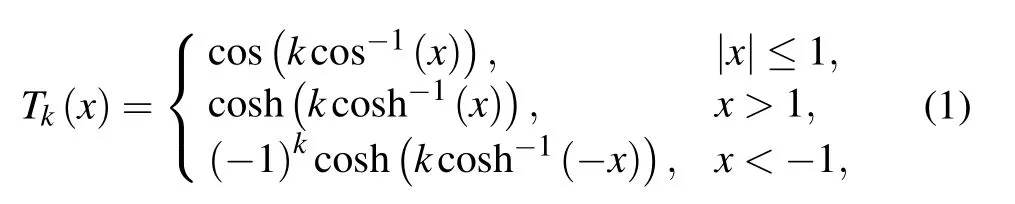

A typical filter in the CD method is based on Chebyshev polynomials.The k-th order Chebyshev polynomial of the first kind is defined by

with initial conditions T0(x)=1 and T1(x)=x.[42]Note that the higher order of the polynomials can be efficiently determined by using the 3-term recurrence

A remarkable property of the Chebyshev polynomial is its rapid growth outside the interval [−1,1], as illustrated in Figs.1(a)-1(c)and in Ref.[41].

For a Hamiltonian H with a spectrum bounded in[Emin,Emax],where Eminis the minimum energy and Emaxthe maximum energy,a Chebyshev filter is designed to amplify the components of the eigenstates corresponding to eigenvalues in the interval [Emin,a] and to simultaneously dampen those in the interval[a,Emax],provided a >Emin.[41]To satisfy this goal,one only needs to map[a,Emax]into[−1,1]by shift and normalization,

The effect of Chebyshev filtering is

with |ψ(0)〉 a random initial state, |φi〉 the i-th eigenstate, cithe random coefficients of the initial state, Eithe i-th eigenvalue of H,and

Fig.1. Chebyshev filter Tk(x)for k=20(a),40(b),and 80(c)and delta filter δk(x)(the k-th order Chebyshev expansion of the Dirac delta function)for k=20(d),40(e),and 80(f). Note the spikes both outside[−1,1]of the Chebyshev filter and around the center of the delta filter. (g)Comparison of the damping effect of the Chebyshev filters(dashed lines)and the delta filters(solid lines)with polynomial orders k=20(red lines),40(blue lines),and 80(green lines). For the Chebyshev filter γ =Tk(−2+x)/Tk(−2)and for the delta filter γ =δk(x)/δk(0). Both filters decay roughly in an exponential form. The horizontal black dotted line denotes the half maximum of the filters. Inset reveals the inverse relation between k(x-axis)and half width at the half maximum(y-axis)for the delta filters(solid line with squares)and the Chebyshev filters(dashed line with circles).

After the Chebyshev filtering,one may construct the subspace as follows:

where|ψ〉is usually a randomly initialized state and Gi=G,with the boundary a been adjusted appropriately for each Gi.[41]Whenever a new state is generated,one shall orthonormalize it against all existing states,in order to keep the d states in Kdas an orthonormalized basis. All one needs next is to calculate the representation matrix R of the Hamiltonian in this special subspace and to diagonalize it directly. The eigenstate corresponds to the largest eigenvalue of R is then utilized to construct a better approximation of the ground state of the original Hamiltonian H.

3. Delta-Davidson method

Although successful in finding the extreme states and many nearby states, the CD method is unable to find the interior states that are far from the extreme ones. In fact, the CD method employs either a low-pass filter or a high-pass filter. To find the interior states,one actually needs a band-pass filter. We thus introduce the delta filter, which is obviously band-passing,to replace the Chebyshev filter.

We construct a delta filter δ(H −λ)to amplify the components of the eigenstates corresponding to an energy close to λ. In other words, the original interior spectrum of H is transformed to the extreme spectrum of δ(H −λ). The delta function is a natural choice to do this,for it possesses a rapid growth in an infinitely small region centered at λ. Contrast to the Chebyshev filter which aims at amplifying the extreme region, the delta filter may well amplify the extreme or interior region by adjusting λ appropriately.

Many other filters, like Green’s function filter (H −λ)−1[25,26]or Gaussian filter exp[−(H −λ)2], may do the same job. However, considering the highly near-degenerate central states in a many-spin system where the Hilbert space dimension may reach several millions, we need an extremely narrow band filter,where the band width is defined as the full width at half maximum. In fact,the narrower the band width,the better the filter effects,because a smaller band width filter dampens the unwanted part more rapidly. On the other hand,the most efficient expansion of a nonperiodic function among all polynomials is given by the Chebyshev polynomial.[43]In consideration of this fact,we numerically find that the delta filter works the best(having the smallest band width)among the above filters under the same order of Chebyshev expansion.

Besides, there is also a straightforward transformation(H −λ)2being widely used to cast the interior spectrum to extreme. In fact, this is nothing but the second order Chebyshev expansion of the delta function, ignoring the constant term. However, this transformation is not a good candidate solution when facing the highly near-degenerate problem. After the transformation, it is much harder to distinguish those neighboring eigenstates. For example, an original level spacing 10−7of H is transformed to 10−14of H2(suppose H is rescaled to unitless for simplicity). Whereas with the delta filter one can choose a proper expansion order to fit in a tiny region of spectrum and to significantly amplify the level spacings at the same time,as shown in Figs.1(d)-1(f). Below,we describe the specific details of the application of Chebyshev polynomial expansion to the delta filter.

is definitely bounded by −1 and 1. For quantum spin systems,the Hamiltonian H is bounded both from above and from below,thus the rescaled operator G can readily be found.

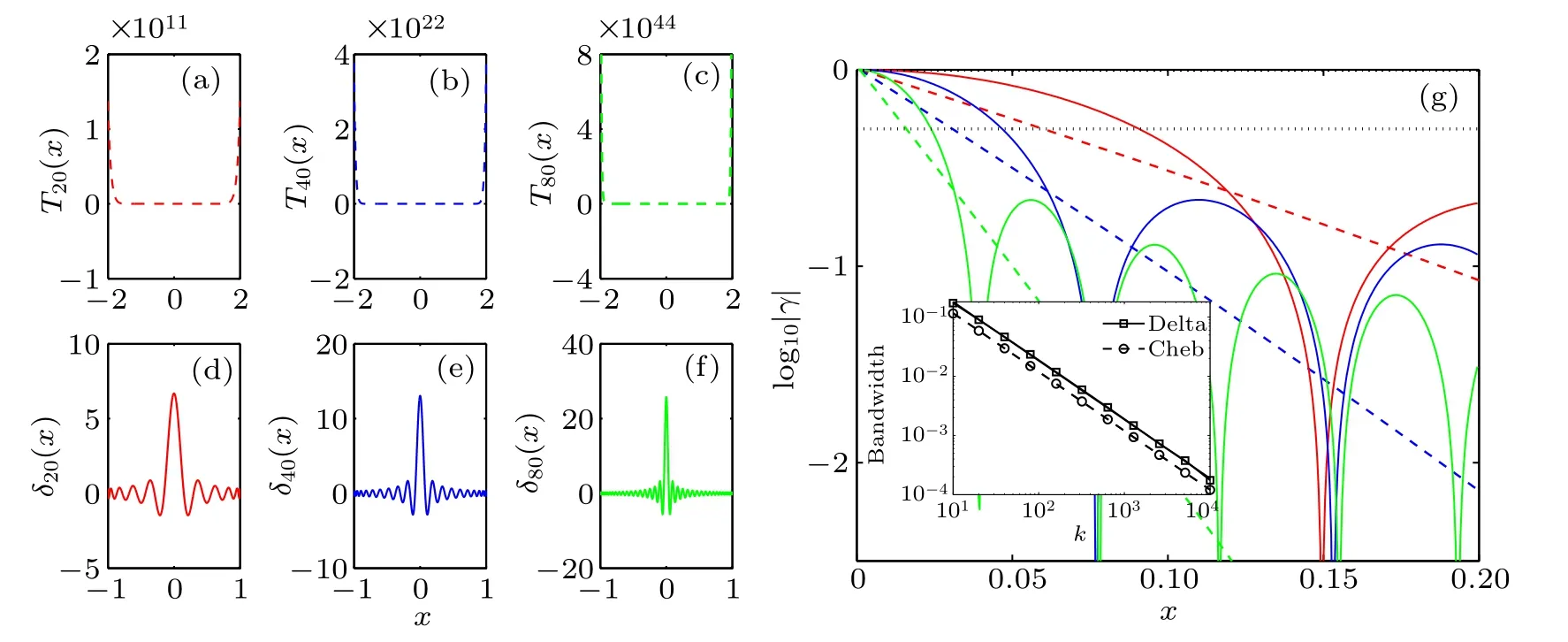

The Chebyshev polynomial expansion of the delta filter now becomes

with λ′=(λ −Ec)/E0and we ignore the constant factor 1/E0in Eq. (7) henceforth. The expansion coefficients ck(λ′) can be calculated using the orthogonal property of the first kind Chebyshev polynomials

where ak=1 for k=0 and ak=2 for k ≥1. With the initial conditions T0(G)=1 and T1(G)=G,the k-th order Chebyshev polynomial can be efficiently determined using the recurrence relation Eq.(2). The filtered state|ψ′〉=δ(G−λ′)|ψ〉can be calculated by summing successively the terms of the series in Eq.(7)until a predefined value K of k is reached. Note that as shown by different curves in Figs. 1(d)-1(f), a larger K corresponds to a smaller filter band width. As shown in the inset of Fig.1(g), the band widths of both the Chebyshev filters and the delta filters are close to each other and inversely proportional to the expansion order k.

However, we remark that in practice the highly neardegenerate eigenvalues still remain a severe problem, even with the help of delta filtering. Generally speaking, in a quantum N-spin system the energy bounds grow polynomially with N while the number of eigenvalues grows exponentially,thus the level spacings roughly decrease exponentially as N increases.[44]For a typical Ising system with a size N=20,the level spacings of the rescaled operator G at the central region may be as small as 10−7(note that G is unitless and bounded by −1 and 1).This is not surprising,for there are 106eigenvalues inside[−1,1],giving an average level spacing 2×10−6.To amplify the components of the wanted eigenstates and dampen the others, the cutoff expansion order K ≃107is required.Such a requirement already implies that 107matrix-vector operations are needed, not to mention the potential necessity of the repeated filtering iterations. Therefore, for 20-spin systems it is almost impossible to find the central region of the spectrum by the delta filtering alone.

The subspace diagonalization, combined with the delta filtering technique, resolves this problem. Since it is hard to cope with the extremely tiny level spacing, one may amplify a cluster of states simultaneously (corresponding to a larger bandwidth)to relax the requirement of large K. For example,to amplify 10 states simultaneously requires K ≃106instead of K ≃107in the above case. Note that after the delta filtering, the state becomes more concentrated in a small energy interval and is closer to the true eigenstate. Furthermore,there is an elegant way to extract additional eigenvalue information from this filtering sequence of states,as indicated by the faster convergence of the Lanczos method compared to the power method.[30]We then construct the Krylov subspace Kd,which is formed by a set of states after iterations of the delta filtering,as follows:

To summarize, simultaneously amplifying a cluster of states reduces the order K while the subspace diagonalization scales down the iteration number of filtering, leading to a tremendous speed-up of the convergence (see also Appendix A). The subspace diagonalization is applicable to the degenerate,near-degenerate,and non-degenerate cases.In this sense, the subspace diagonalization is an essential ingredient of the DELDAV method.

By combining the delta filter and the subspace diagonalization, the explicit algorithm of the DELDAV method is as follows.

(ii)Choose an initial random normalized trial state|ψ0〉,set|φ0〉=|ψ0〉,V0=[|ψ0〉],and W0=[|φ0〉].

(iii)For n=1,2,...,nmax,do the following iteration steps to refine the trial state.

(a)Generate a new normalized trial state through the delta filtering

where β is a normalization factor. Then construct the 2N×(n+1)matrix Vnas

and note that span(Vn)=Kn+1.

(b)Orthonormalize|ψn〉against the base states of the orthonormal matrix Wn−1,which results in the state|φn〉. With|φn〉we construct the matrix Wnas

The orthogonalization is performed by the method developed by Daniel-Gragg-Kaufman-Stewart, which has an appealing feature of being numerically stable.[45]

(c) Compute the subspace projection matrix Rnof the shifted Hamiltonian

Then compute the eigen-decomposition of Rnas

with Λna diagonal matrix and Ynstoring the normalized eigenstates of Rn.Sort the eigenpairs of Rnin non-decreasing order by the absolute value of eigenvalues.

(d)Refine the basis matrix Wnby subspace rotation

(e)If the dimension of Wnequals the parameter d,throw away the last few states of Wn. Then check if the first few states of Wnare converged and count m, the number of converged states. If m ≥kwantor n=nmax,stop the iteration loop,otherwise continue it.

The DELDAV method is applicable to find both the extreme and the interior states. For the extreme states, we estimate the Eminand Emaxin the beginning, by employing the upper-bound-estimator, which costs little extra computation and bounds up the largest absolute eigenvalue.[46]The estimator gives an initial guess of max(|Emin|,|Emax|), then we set Emaxas it while Eminnegative of it. For this setting we have utilized the symmetry of the DOS, a bell-shape profile centered at zero,in the many-spin systems. It is important that Eminand Emaxmust bound all eigenvalues both from above and from below. Otherwise, eigenstates with the largest absolute eigenvalues may also be magnified through the delta filtering,which leads to the failure of the DELDAV method. For the interior states,we directly input the previously found Eminand Emaxsince typically they are quite easy to be searched for.

We note that the DELDAV method inherits the remarkable flexibility of the Davidson-type methods,which is beneficial to save memory and reduce orthogonalization cost.Therefore, we employ both the inner-outer restart technique,[40]which relaxes the requirement for memory, and the block filter technique, which means several states are filtered during a single iteration, in programming of the DELDAV method.This approach has been proved in Ref.[40]to converge rapidly with d=kwant+c,where c is a positive integer,in the tests of the CD method. Such a requirement of the subspace dimension is different from that of the implicitly restarted Arnoldi method (ARPACK),[47]which needs d ≈2kwantto compute kwanteigenpairs efficiently.

4. Numerical results

We apply the DELDAV method to the eigenvalue problem in quantum spin-1/2 systems containing two-body interactions. Such systems are good models for investigating a large class of important problems in quantum computing,solid state theory, and quantum statistics.[1,2,4]Exact eigenvalues and eigenstates help us in understanding the physics behind the complicated model and often serve as a benchmark to evaluate other approximate methods as well.

In general,the system consists of N spins and M pairs of coupling,where M ranges from 1 to(N2−N)/2. The Hamiltonian is given by

We specify the above many-spin model by two typical physical systems. One is the disordered one-dimensional transverse field Ising model,[49]where the Hamiltonian is

Another system is the spin glass shards,[23]which represents a class of global-range interacting systems that require relatively large bond dimensions to be tackled by the DMRG methods.[50]The Hamiltonian describing the system is

Despite the asymptotic advantages of the Chebyshev expansion and the small band width of the delta filter, it is not a priori clear if the DELDAV method is efficient for the real physical systems. We perform numerical tests to present the correctness,efficiency,and numerical robustness of the DELDAV method. All the timing information reported in this paper is obtained from calculations on the Intel(R)Xeon(R)CPU E5-2680 v3,using sequential mode.The convergence criterion is set as‖r‖<ε with ε =10−10.

We set kwant=10,i.e.,to find out the 10 eigenstates with eigenvalues closest to a given λ. In fact, we are able to calculate hundreds of eigenvalues with limited extra time consumption. For example, for the 15-spin Ising model, we are able to calculate 200 eigenpairs in 5278 CPU seconds versus 10 eigenpairs in 1408 CPU seconds. But for simplicity we consider the computation of 10 eigenpairs henceforth. The ground cluster indicates the lowest 10 eigenpairs while the central cluster indicates the 10 eigenpairs closest to λ =0.

Fig.2. The relative error of the 10 converged states in ground clusters(red lines with diamonds),central clusters(green lines with circles),the 1σ clusters (blue lines with squares), and the 2σ clusters (black lines with asterisks), is shown for(a)Ising model with N =20 and(b)spin glass shards model with N =13. The ground clusters are ordered by eigenvalues while the others by the distance between λ and eigenvalues, in an increasing order. Insets present the normalized DOS for the systems and the location of different λ’s (vertical dashed lines). The x-axis of the insets is the system energy measured by Γ,and the y-axis the normalized DOS.

We present in Fig.2 the relative error η = |(E −Eexact)/Eexact|of the computed eigenvalues for the Ising model with N =20 and the spin glass shards with N =13, where E indicates the certain eigenvalue computed by the DELDAV method. The x-axis is the index of the converged states in the 10-eigenstate cluster. Full exact eigenvalues of both systems have been obtained by other reliable methods. For the Ising model,the Jordan-Wigner transformation is used,while the spin glass shards is fully diagonalized through the subroutine ZHEEVR in LAPACK.[51]For each system, four 10-eigenstate clusters located at the regions with different local DOSs are calculated by our method, as shown in the insets in Fig.2. For the three interior clusters,λ is chosen as 0,1σ,and 2σ,where σ is the standard deviation of the Gaussian-like distribution of the DOS.

Clearly, our results for both systems agree well with the exact results at different λ’s with various local DOSs,including the extreme and the interior states (especially the central states). Note that although the absolute errors converge to a similar level, the relative errors η for the central states show different behavior. The relatively large η for the central states is due to the smallness of Eexact, which becomes extremely tiny for large N. In fact,for the Ising model with 20 spins,the exact eigenvalues of the Hamiltonian H at the central region are about 10−5Γ,while for the spin glass shards with 13 spins they are about 10−3Γ.

Fig.3. Convergence processes measured by the residual norm for the computation of a 10-eigenstate cluster in Ising model (blue solid lines with squares)and spin glass shards(red dashed lines with circles). (a)Chebyshev filtering process for ground clusters,both systems are of size N=25;(b)delta filtering process for central clusters,with system size N=20 for Ising model and N=19 for spin glass shards. Each line corresponds to the convergence process of an eigenstate or a pair of close eigenstates(near-degenerate),since close eigenstates may converge simultaneously.

We present in Fig.3 the convergence processes of the CD method for the ground clusters and the DELDAV method for the central clusters. The x-axis shows the number of iterations,and during each iteration there is a filtration either by the Chebyshev filter or by the delta filter,followed by a subspace diagonalization. The iterative filtering implies an effectively growing k in Eq.(4)for both filters. The y-axis represents the energy error bound for the state which is currently the closest to converge. We note that the eigenstates situated closer to λ (λ ≤Emaxfor calculating the ground clusters)would show a faster convergence rate, thus the various convergence lines are ordered by the distance between the eigenvalues and λ.One may view the convergence process as a special dynamical evolution that concentrates the initial random wave function,which distributes widely in energy basis, into a sharp wave packet around λ.

We set K =50 for the Chebyshev filter in both systems.The straight lines in Fig.3(a) confirm the exponential damping by the Chebyshev filtering,as also shown in Eq.(4). However,after the fast convergence of the first two near-degenerate states,the residual norm jumps to a rather high value.[41]This sudden change might be due to the over-damping of the unconverged states. For the small components, the numerical error will run in and be amplified after the orthonormalization.One thus needs to amplify the components of the nearest to converge states again through Chebyshev filtering in the following iterations.

For computation of the central clusters, we employ the DELDAV method for both spin systems since the CD method is not capable to search these eigenvalues. For the delta filters, we set K =80000 in the Ising model and K =50000 in the spin glass shards. The huge increase of K, compared to the Chebyshev filters, comes from the high local DOS in the central region(see the insets in Fig.2). In general,a high local DOS means that more states are squeezed in an energy bin(near-degenerate) and the average level spacing is small. In order to discriminate these states, one needs a much smaller bandwidth filter,thus a very large K.

The convergence processes for the central clusters are shown in Fig.3(b). Those two curves clearly show three stages. The first stage exhibits a fast decay of the residual norm, because the components of the eigenstates with eigenvalues far from λ diminish quickly after the delta filtering,as shown in Figs. 1(d)-1(f). The second one is, however, a plateau. We suppose the slowdown of the convergence inside the plateau might originates from the flatness of the delta filters nearby λ. Without the subspace diagonalization, the plateau would persist for a rather long time, as further confirmed in Appendix A.It is interesting that the third stage shows an exponential decay of ‖r‖ (see the two insets), which is similar to the behavior in Fig.3(a). Obviously, the fast decay in this stage is due to the combined effect of the delta filtering and the subspace diagonalization,which is the essential idea of the Davidson-type methods.

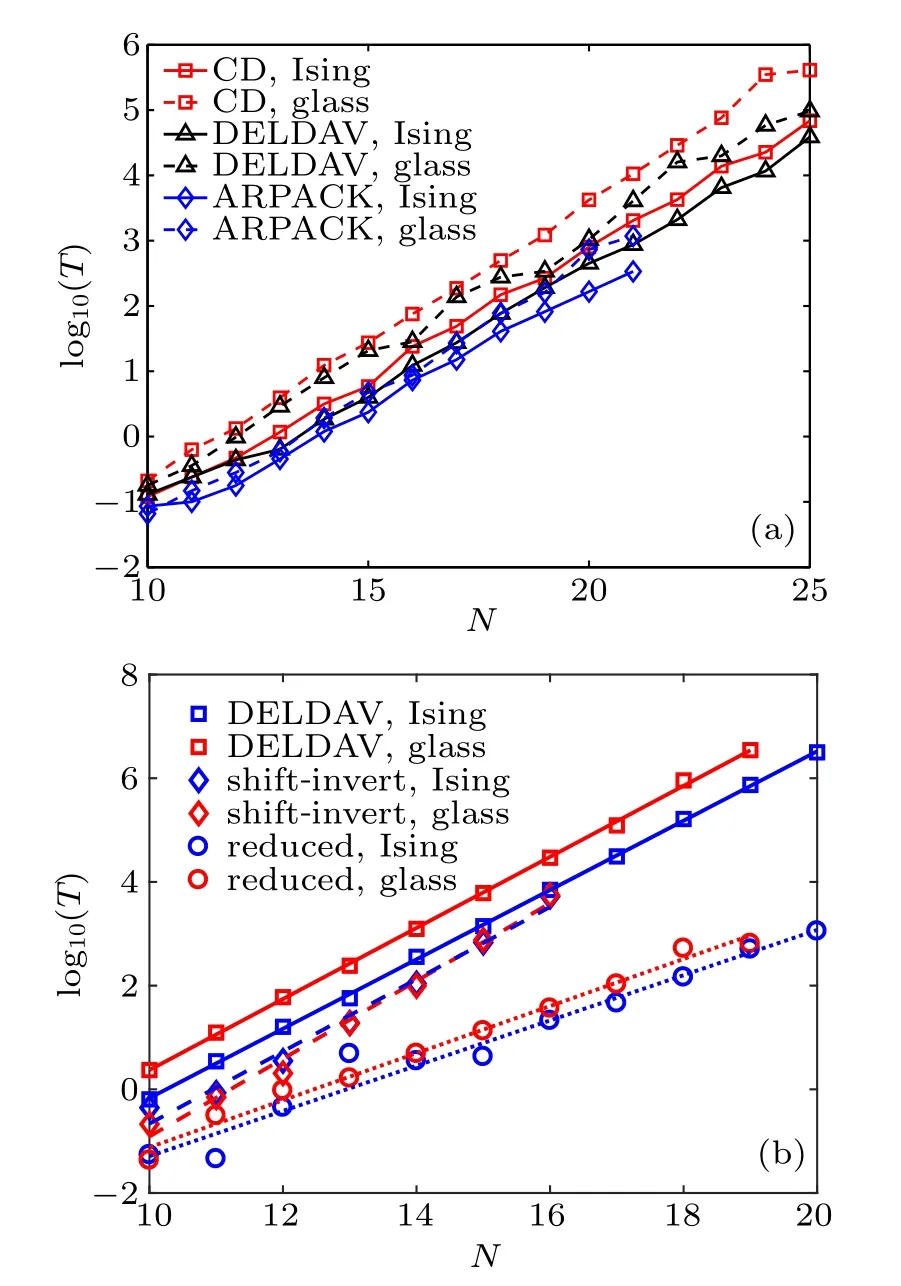

The scaling behaviors of the DELDAV method for finding the ground and central clusters of the Ising model and the spin glass shards are presented in Fig.4. For the ground clusters, we also present scaling lines of the CD method and ARPACK[47]for comparisons. ARPACK is one of the most robust and efficient eigensolvers in practice.[52,53]It provides the matrix-vector products mode for extreme cluster calculations and often serves as a benchmark. We define the convergence time T as the CPU time (seconds) for calculating a 10-eigenstate cluster.As shown in Fig.4(a),the scaling of runtime versus system size between these methods is comparable.In detail,ARPACK costs the least CPU time to converge,followed by the DELDAV method while the CD method performs the worst. All three methods have similar memory consumption since only the matrix-vector products are required.

Fig.4. Scaling behavior measured by the convergence time T (CPU seconds)versus system size N. (a)Comparison of the CD method(red lines with squares), the DELDAV method (black lines with triangles),and ARPACK (blue lines with diamonds) for calculating the ground clusters of the Ising model (solid lines) and of the spin glass shards(dashed lines). All of them share a similar exponential growth with N.(b)Scaling lines of calculating the central clusters, with the DELDAV method(solid lines with squares),the shift-invert method(dashed lines with diamonds)and the reduced scaling lines of the DELDAV method(dotted lines with circles)for the Ising model(blue)and the spin glass shards (red). All these lines are obtained via linear fitting. The shiftinvert method possesses the maximum slope. The reduced scaling lines are close to those of(a).

We note, however, that both the CD method and ARPACK are not suitable for solving interior eigenstates. As mentioned before,the CD method cannot directly find out the interior eigenstates. When combined with the transformation(H −λ)2, the tiny level spacings (10−7for G) at the central region are squared (10−14for G2). Roughly speaking,it means 107times longer for the convergence, which is an unacceptable long time. In finding the interior eigenstates,ARPACK provides only the shift-invert mode instead of the matrix-vector products mode. This mode is essentially the shift-invert method,i.e.,replacing the original Hamiltonian H by its inverse H−1. The shift-invert method is widely used in calculating eigenpairs at the middle of the spectrum,to name a few,like in Refs.[28,54-56].However,for large spin systems,the matrix sizes are extremely huge and one will quickly run out of memory when calculating the inverse of the Hamiltonian. On the contrary, the DELDAV method is applicable on the full spectrum while needing only matrix-vector products,which does not require an explicit Hamiltonian matrix representation.

For the central clusters, we present in Fig.4(b) the scaling lines of the DELDAV method for both the Ising model and the spin glass shards. Similarly, the DELDAV method exhibits an exponential scaling. However, the scaling line slopes of the central clusters are far higher compared to that of Fig.4(a). To compare quantitatively,we extract the scaling constants α by fitting the numerical results,where α is defined by T =T0exp(αN). The values of α are shown in Table 1 for the ground and central clusters. One may observe that α in Fig.4(b)are indeed apparently larger than those in Fig.4(a).

Table 1. Scaling constants α by exponential fitting of the DELDAV curves in Fig.4.

In Fig.4(b)we also present the scaling lines of the shiftinvert technique combined with ARPACK in computing the central clusters for comparison. The extracted scaling constants of the shift-invert method are α = 1.61 for the Ising model and α = 1.73 for the spin glass shards, which are slightly higher than those in the DELDAV method. We note,however,that the LU decomposition(factorization)dominates the computation time for the shift-invert method when the system size N ≥18.[28]Thus for large systems its convergence time is approximately proportional to the cube of matrix size,[57]giving a scaling constant α ≈2.08. Considering the similar convergence time of both methods for N=16 systems, it is evident that the DELDAV method converges faster for large enough spin systems. In addition, the shift-invert method requires 98 GB memory for N =16 systems. Such a requirement is much larger than that of 89 MB memory by the DELDAV method for the same system. Restricted by the memory capacity,with the shift-invert method we cannot perform calculations for system size N ≥17. These comparisons clearly indicate the huge advantages of the DELDAV method over the shift-invert method for large spin systems.

We present in Fig.5 both the convergence time of the DELDAV method and the local DOS for the Ising model and the spin glass shards. We have performed two tests with various λ’s, the first for the Ising model with 15 spins while the second for the spin glass shards with 13 spins. The DOSs for both systems are statistically constructed by their entire exact eigenvalues. Clearly, figure 5 shows that the DELDAV method is much more efficient in finding the interior states on the edge of the density profile than the central ones.It also implies a proportional relationship between the local DOS and the convergence time. This relation suggests that the DELDAV method indeed has similar efficiency in finding the interior states(including the central ones)and the extreme states,in the sense that the local DOS being the same.

Fig.5. The convergence time (blue squares) of the DELDAV method for computing a variety of eigenvalue clusters and the local DOS(green dashed lines) for a 15-spin Ising model (left) and for a 13-spin glass shards (right). Obviously, the convergence time is proportional to the local DOS.

Besides the DOS,many other factors in practice may have influence on the convergence time and efficiency of the DELDAV method. Two factors,the subspace dimension d and the cutoff order of Chebyshev expansion K,are controllable in the numerical calculations. In Fig.6, we present the dependence of the convergence time on d and K. The tests are performed for finding the central cluster in the 15-spin Ising model,with all parameters the same except for d and K. In the small d limit, we notice that a larger K greatly speeds up the convergence of the program. While in the large d limit, the convergence time is nearly independent of d and K, indicating the robustness of the DELDAV method. This also suggests one to choose d =100 when 10 eigenvalues are wanted, in order to converge quickly and to save memory at the same time.

Fig.6. The convergence time of the DELDAV method versus the subspace dimension d, with the cut off orders K = 1000 (red line with triangles), 2000 (blue line with diamonds), 5000(pink line with asterisks),and 10000(black line with squares). The tests are performed for calculating the central cluster in a 15-spin Ising model. The robustness of the DELDAV method is revealed,as the convergence time is almost independent of d and K in a certain wide region.

5. Discussion and concussion

In conclusion,we propose the delta-Davidson method to solve both the extreme and interior eigenvalue problems. The method hybridizes the advantages of the delta filtering and the subspace diagonalization, which efficiently constructs the special subspace and solves the highly near-degenerate problem. We present an explicit algorithm and the specific details to apply the DELDAV method to quantum spin models,including a strongly long-range interacting system. The numerical experiments for the Ising model and the spin glass shards confirm the exactness,efficiency,and robustness of the DELDAV method. Although all three methods share roughly the same scaling behavior for extreme eigenvalue calculations,the DELDAV method has an obvious advantage over the Chebyshev-Davidson method and Arnoldi method with its capability for finding the interior eigenstates. As for the central eigenstates, the DELDAV method shows a potentially faster convergence rate for a large enough system and consumes far less memory,compared to the shift-invert method. For the interior regions with small density of the states, the DELDAV method may converge several ten times faster. The small requirement of memory makes it possible to simultaneously run several threads to compute different disorder settings. These features render the DELDAV method a competitive instrument for highly-excited-eigenpairs calculations,which are essential in many physical problems such as many body localization in condensed matter physics,[28,58,59]level statistics and scarring in quantum chaos,[23,60]entropy and partition function calculation in many-body quantum statistics,[3]and correlation function and entanglement entropy in quantum computing and quantum information processing.[1,61]

After the submission of this work, we noticed a recent paper[58]by Sierant,Lewenstein,and Zakrzewski,where an essentially similar method the polynomially filtered exact diagonalization was developed to investigate the manybody localization transition in 1D interacting quantum spin-1/2 chains.

Appendix A:DELDAV vs. delta filtering

To illustrate the advantages of the DELDAV method in dealing with the near-degenerate problem,we plot in Fig.A1 the convergence processes in finding a single eigenvalue at the largest DOS region for both the DELDAV method and the delta filtering technique. The specific eigenvalue to compute is the closest one to λ. The tests are performed for the two 15-spin systems,the Ising model with λ =10−4and the spin glass shards with λ =0. The level spacings of the rescaled operator G for both systems are about 10−5. For both methods the initial states are random. For the DELDAV method,the subspace dimension d=50 and the cutoff order K=5000,i.e.,the delta filter is δ5000(H −λ)in each iteration;while for the delta filtering technique the expansion order k keeps growing and we record the residual norm||r||when k is increased by 5000.

Fig.A1. Comparison of the convergence processes for the DELDAV method (top axis, red lines) and the delta filtering (bottom axis, blue lines) in computing a single eigenvalue at the largest DOS region for the Ising system (solid lines) and the spin glass shards (dashed lines).Both systems are of size N =15. Clearly, the DELDAV method converges faster.

The convergence lines for the two methods clearly show a different convergence rate. Although the DELDAV method presents a rather slow convergence during the plateau stage,it quickly speeds up to a fast convergence rate after accumulation of the good base states. On the contrary,the delta filtering technique alone keeps the slow convergence for a rather long time. The advantage of the DELDAV method over the delta filtering technique is thus confirmed.

Acknowledgment

We thank A.Q.Shi for discussions.

- Chinese Physics B的其它文章

- Transport property of inhomogeneous strained graphene∗

- Beam steering characteristics in high-power quantum-cascade lasers emitting at ~4.6µm∗

- Multi-scale molecular dynamics simulations and applications on mechanosensitive proteins of integrins∗

- Enhanced spin-orbit torque efficiency in Pt100−xNix alloy based magnetic bilayer∗

- Soliton interactions and asymptotic state analysis in a discrete nonlocal nonlinear self-dual network equation of reverse-space type∗

- Discontinuous event-trigger scheme for global stabilization of state-dependent switching neural networks with communication delay∗