Decoding degeneration: the implementation of machine learning for clinical detection of neurodegenerative disorders

Fariha Khaliq, Jane Oberhauser, Debia Wakhloo†, Sameehan Mahajani*

Abstract Machine learning represents a growing subfield of artificial intelligence with much promise in the diagnosis, treatment, and tracking of complex conditions, including neurodegenerative disorders such as Alzheimer’s and Parkinson’s diseases. While no definitive methods of diagnosis or treatment exist for either disease, researchers have implemented machine learning algorithms with neuroimaging and motion-tracking technology to analyze pathologically relevant symptoms and biomarkers. Deep learning algorithms such as neural networks and complex combined architectures have proven capable of tracking disease-linked changes in brain structure and physiology as well as patient motor and cognitive symptoms and responses to treatment. However, such techniques require further development aimed at improving transparency, adaptability, and reproducibility. In this review, we provide an overview of existing neuroimaging technologies and supervised and unsupervised machine learning techniques with their current applications in the context of Alzheimer’s and Parkinson’s diseases.

Key Words: Alzheimer’s disease; clinical detection; deep learning; machine learning; neurodegenerative disorders; neuroimaging; Parkinson’s disease

From the Contents

Introduction

Search Strategy

Neuroimaging

Machine Learning Approaches

Deep Learning Applications

Evaluation of Classifier Performance

Machine Learning Classifiers for Alzheimer’s Disease

Machine Learning Classifiers for Parkinson’s Disease

Conclusion and Outlook

Introduction

Healthcare represents one of the most prolific fields for the development and deployment of artificial intelligence (AI) technologies, medical imaging, wearable sensors, augmented and virtual reality, and more (Myszczynska et al., 2020). With today’s staggering abundance of big data, AI represents a particularly noteworthy emerging field, as it aims to automate human intelligence and emulate cognitive functions with the help of a wide range of mechanisms (Choi et al., 2020; Emmert-Streib et al., 2020). AI has already shown extraordinary promise in several areas, from the development of early diagnostic tools to the successful completion of robot-assisted surgery. However, the most recent growth and development in AI have come via advancements in machine learning (Myszczynska et al., 2020).

Machine learning (ML) is a subfield of AI that consists of algorithms targeted toward recognizing patterns and extracting noteworthy features from large datasets (Cao et al., 2018). Once these patterns are identified and learned, ML algorithms can be used to classify and predict future results. In healthcare, ML can be used on data from various sources, assisting in diagnosis as well as disease management, tracking, and outcome prediction. For instance, real-time remote monitoring by ML systems can detect disease severity, record symptoms, and register a patient’s response to treatment (Belić et al., 2019).

As with other medical disciplines, ML has been well integrated into the field of neurology, specifically concerning the computer-aided identification, monitoring, and management of symptoms associated with neurodegenerative movement disorders like Parkinson’s disease (PD). In PD, the pathogenic accumulation of alpha-synuclein in Lewy bodies and Lewy neurites drives a gradual loss of dopaminergic neurons in a dark-colored midbrain region known as the substantia nigra pars compacta (de Miranda and Greenamyre, 2017; Mahajani et al., 2019; Raina et al., 2020; Psol et al., 2021; Garg et al., 2022). This loss of dopaminergic neurons occurs long before the clinical characteristics of PD manifest and contributes to a variety of motor and non-motor symptoms (Giacomini et al., 2015; Marotta et al., 2016; Mahajani et al., 2021; Raina et al., 2021). Motor symptoms of PD range from rigidity and bradykinesia (slow, impaired movement) to resting tremors and postural instability. Non-motor symptoms of PD include constipation, olfactory dysfunction, disturbed sleep, cognitive and behavioral changes, and depression (de Miranda and Greenamyre, 2017; Kouli et al., 2018; MacMahon Copas et al., 2021). Yet, despite PD being the second most prevalent neurodegenerative disorder today, no definitive method currently exists for its antemortem diagnosis, nor is there a viable therapeutic strategy for its treatment (DeMaagd and Phillip, 2015; de Miranda et al., 2017).

However, ML algorithms coupled with wearable devices have been used to address some of the challenges associated with PD. For instance, ML has been used to differentiate between PD and other disorders that present themselves similarly and to track and manage PD progression. ML-integrated tools possess great potential in clinical practice due to their increased accuracy, reliability, accessibility, and efficiency in clinical decision-making (Myszczynska et al., 2020).

ML has likewise been used to track disease progression and to provide a source of differential diagnosis in the context of Alzheimer’s disease (AD). The most common form of dementia, AD is characterized by the abnormal accumulation of two proteins: amyloid-beta (Aβ) and tau. Misfolded amyloid precursor protein aggregates to form extracellular Aβ plaques, and hyper-phosphorylated tau forms intracellular neurofibrillary tangles. As neurofibrillary tangles and Aβ plaques form, synaptic degeneration and neuronal death follow, driving a neurodegenerative progression through various brain regions (Blennow et al., 2006; DeTure and Dickson, 2019; Soria Lopez et al., 2019; Wakhloo et al., 2022).

AD pathogenesis can be divided into stages depending on how far this neurodegeneration progresses and where neurofibrillary tangles and Aβ plaques are present. Early-phase AD brains bear neurodegenerative features in the trans-entorhinal cortex. These features spread to the limbic regions in mid-phase AD brains and eventually to the iso-cortical regions in the late phases of the disease (Braak and Braak, 1991; Otero-Garcia et al., 2022). The symptoms accompanying this gradual neurodegenerative spread include the progressive impairment of cognitive functions, specifically speech, recognition, episodic memory, decision making, and orientation (Colombi et al., 2013; Cortelli et al., 2015; Mahajani et al., 2017; Wakhloo et al., 2020). As AD progresses, patients also sometimes suffer from apraxia, or the inability to perform learned movements despite possessing the desire, understanding, and physical ability required to do so (Blennow et al., 2006). As with PD, there exists neither a conclusive method for the diagnosis of AD nor an effective therapy capable of treating more than the symptoms of the disease (Breijyeh and Karaman, 2020).

Most prominent clinical symptoms develop after the brain has sustained significant damage. Because efficient diagnostic tools are lacking, physicians are unable to diagnose these diseases before irreversible damage has been done. It is, therefore, imperative to develop non-invasive methods of disease detection. Currently, neuroimaging can assist clinicians in staging and screening for specific identification of diseases. The use of AI, with ML and deep learning (DL) algorithms, could assist clinicians in the preclinical diagnosis of such diseases. To this end, the combination of ML algorithms and neuroimaging techniques has granted researchers insights that may lead to a method for early AD diagnosis. For instance, recent studies have used ML to differentiate between the brains of patients with AD and those with mild cognitive impairment (MCI), the precursor to AD (Li et al., 2012; Suk et al., 2016; Saboo et al., 2022). Results of these studies allow researchers to identify biomarkers capable of predicting disease trajectory (Li et al., 2012; Suk et al., 2016) and of explaining individual vulnerability or resilience to cognitive decline (Saboo et al., 2022). Most importantly, the methods used in ML/neuroimaging studies provide a roadmap for the future identification of cognitively vulnerable individuals and the development of new therapeutic interventions (Saboo et al., 2022). In this review, we aim to outline the ML/DL modeling tools available to researchers and highlight certain use case scenarios.

Search Strategy

The references cited in this review have been obtained from the following databases: PubMed, Google Scholar, and Science Direct. We referenced full-text review articles, randomized controlled trials, meta-analyses, and textbooks. No limits were used.

Neuroimaging

Today’s neuroimaging technologies have proven capable of illustrating the brain’s anatomy with a resolution comparable to that achieved with high-quality images taken of thin tissue slices in vitro (Shen et al., 2017). Technological advancements in medical image processing have led to its widespread use and contributed to the development of new areas of exploration for the prediction and future diagnosis of neurodegenerative diseases (Noor et al., 2019). We discuss the neuroimaging techniques capable of identifying functional and anatomical changes related to neurodegeneration below.

Magnetic resonance imaging

Magnetic resonance imaging (MRI) uses powerful magnets together with radiofrequency pulses to form two- or three-dimensional images of the brain without the need for radioactive tracers. MRI enables the imaging and evaluation of functional neural activity in the cortical regions. The images generated by MRI allow clinicians and scientists to study both functional and structural abnormalities of the brain in neurodegenerative diseases (Ahmed et al., 2019).

Functional magnetic resonance imaging

Functional MRI (fMRI) detects the small changes in blood flow that accompany brain activity. It is used to determine the part of the brain responsible for critical functions, to assess the effects of stroke, or to guide brain treatments (Dijkhuizen et al., 2012). Two other commonly used MRI-based techniques are Quantitative Susceptibility Mapping and Diffusion Tensor Imaging. Quantitative Susceptibility Mapping detects the difference in magnetic susceptibility between healthy and diseased tissues, whereas Diffusion Tensor Imaging exploits the sensitivity of the magnetic resonance signal to the small random motion of water molecules (Liu et al., 2015; Ruetten et al., 2019). Quantitative Susceptibility Mapping is generally used to quantitatively assess tissue properties, whereas Diffusion Tensor Imaging has been used to study the reorganization of the brain in different stroke models.

Arterial spin labeling

Arterial spin labeling (ASL) is one of the most widely used MRI techniques in clinical diagnosis. This non-invasive method can measure brain perfusion, providing a reliable technique to evaluate the cerebral blood flow of an individual suspected to have a neurodegenerative disorder (Arevalo-Rodriguez et al., 2021). ASL-MRI is also used to augment routine diagnostic procedures by providing a source of data for differential diagnosis, particularly in the differentiation between AD and frontotemporal dementia (Mas, 2018). 3D pseudo-continuous ASL-MRI data and tissue segmentation methods of the entire supratentorial cortex and ten gray matter regions are used to quantify cerebral blood flow and gray matter volume (Arevalo-Rodriguez et al., 2021). ASL-MRI is generally complemented with other cognitive examinations and/or questionnaires (De Vis et al., 2018). For instance, the Mini-Mental State Examination is used to distinguish patients suffering from AD from patients with frontotemporal dementia and from controls. The Mini-Mental State Examination is a 30-question quiz developed by Folstein and McHugh in 1975 to help clinicians grade the cognitive state of patients (Upton, 2020). The quiz assesses attention, orientation, memory, registration, recall, calculation, language, and the ability to draw a complex polygon (Mas, 2018).

Positron emission tomography

Positron emission tomography (PET) measures radiation to generate two- or three-dimensional images that record the circulation of bloodborne radiotracers throughout the brain (Fleisher et al., 2020). The primary benefit of PET is that it provides a visualization of brain activity and function, illustrating blood flow, oxygen level, and glucose metabolism in functional brain tissues (Bao et al., 2021). Combining PET scans with structural MRI could significantly improve the accuracy of neurological disease identification. The images obtained after using the radiotracer fluorodeoxyglucose and PET have been optimized to illustrate patterns characteristic of neurodegenerative diseases (Fleisher et al., 2020; Bao et al., 2021). Fluorodeoxyglucose-PET plays a crucial role in the early detection and monitoring of AD, illustrating pathophysiological changes in patient brains (Fleisher et al., 2020; Bao et al., 2021; Ni et al., 2021).

Single-photon emission computed tomography

Single-photon emission computed tomography (SPECT) is a functional nuclear imaging technique reliant upon radioactive tracers, or SPECT agents. SPECT is primarily used for the evaluation of regional cerebral blood flow, a measure of the rate of delivery of arterial blood to the capillary bed in brain tissues per unit time. The output of SPECT comes in the form of two- or three-dimensional images. SPECT has previously assisted in distinguishing between AD and white matter vascular dementia cases by analyzing the semi-quantitative circumferential profile (Ahmed et al., 2019).

Machine Learning Approaches

ML algorithms facilitate the clinical decision-making process by automatically classifying and predicting disease progression using computer-aided diagnosis, rather than the hands-on interpretation by medical experts (Shen et al., 2017; Myszczynska et al., 2020). ML models are trained by multiple techniques, including transfer learning using pre-trained weights, ensemble model construction, and new model development. Training data can be retrieved from multiple open source platforms such as Kaggle, IEEE DataPort, and Grand Challenge as well as from specialized neuro data repositories, such as NeuroVault, Whole Brain Atlas, Temple EEG database, SchizConnect, The Pain Repository, Open Access Series of Imaging Studies (OASIS), Glucose Imaging in Parkinsonian Syndromes Project (GLIMPS), Brain-CODE, Alzheimer’s Disease Neuroimaging Initiative (ADNI), OpenNeuro, and Collaborative Research in Computational Neuroscience (CRCNS).

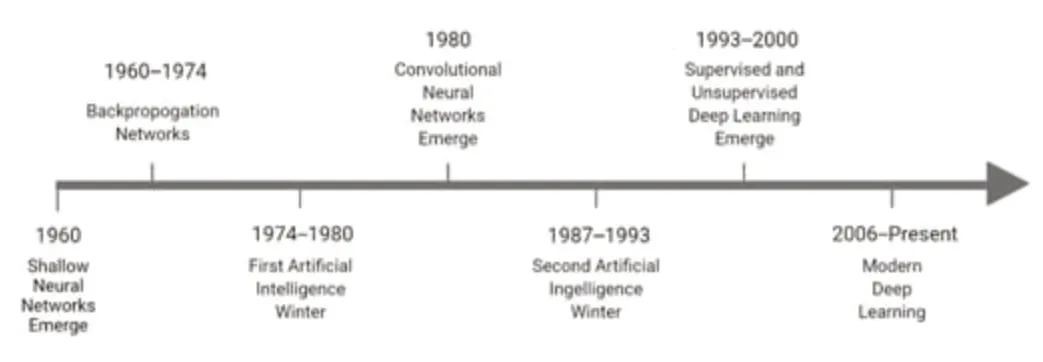

Deep learning models

Deep learning (DL) is an emerging soft computing technique in ML that often relies on layered algorithmic structures known as neural networks (Ahmed et al., 2019; Myszczynska et al., 2020). A DL architecture is referred to as a “hybrid model” when combined with a traditional ML architecture, such as a support vector machine (SVM), as a classifier. DL and neural networks have been implemented in translational studies ranging in focus from sequence binding (Alipanahi et al., 2015; Trabelsi et al., 2019) to structural and image analysis (Shen et al., 2017; Saboo et al., 2022). Much ongoing research is focused on adapting neural network structures for disease detection and treatment analysis applications (Ahmed et al., 2019; Figure 1). The ML mechanisms involved in DL techniques are optimized via a model training process, where the computer is provided with a dataset (input) and an associated collection of outputs (Gautam and Sharma, 2020). The job of the ML model is to adapt its algorithm to fit an identified relationship between the two sets of information (Choi et al., 2020). This learning process falls into one of four categories, depending on the amount of labeling applied to a training dataset. In supervised learning models, entirely labeled training data provide an answer key that a model can use to evaluate and inform its accuracy (Segovia et al., 2018). In contrast, unsupervised models draw from entirely unlabeled training data and must rely on independently drawn conclusions and extracted features. Alongside these two categories are semi-supervised and reinforcement learning (Segovia et al., 2018). Semi-supervised models use both labeled and unlabeled training data, while reinforcement learning models train using an optimization-focused reward system (Kang and Jameson, 2018; Choi et al., 2020). This review will focus on supervised and unsupervised approaches, as reinforcement learning is currently not well-suited for medical analyses (Choi et al., 2020).

Figure 1|The evolution of deep learning techniques over time.

Supervised models

Algorithms that can identify patterns and generate hypotheses by using externally provided information to predict future outcomes are known as supervised ML algorithms (Choi et al., 2020). Supervised models are primarily used for data classification, regression, and the prediction of desired outcomes. Some of the common techniques proposed to solve problems using supervised ML algorithms include rule-based techniques, logic-based techniques, instance-based techniques, and stochastic techniques (Singh et al., 2016). Several commonly used supervised learning methods are described below.

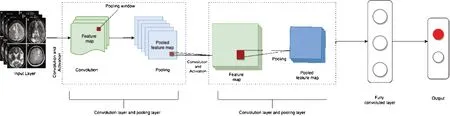

Between the input and output, DL neural networks consist of multiple hidden layers responsible for data processing and computation. In convolutional architectures, the hidden layers typically consist of convolutional layers, pooling layers, and fully connected layers with activation functions. The convolutional layer is the main building block of such a network. It consists of several filters, or kernels, whose parameters are learned throughout model training (Zhao et al., 2021). Examples of well-characterized and widely used DL models include convolutional neural networks (CNN), deep neural networks (DNN), and recurrent neural networks (RNN) (Uddin et al., 2019).

Built to imitate the alternating layers of cells present in the visual cortex of the human brain, CNN are composed of three types of layers: the convolutional layer, the pooling layer, and the fully connected layer. CNN implement a one-way model, in which information is transmitted from the input layer to the output layer only (Zhao et al., 2021). This feed-forward network structure can be implemented in both a supervised and an unsupervised manner (Rovini et al., 2018). CNN represent the most widely used DL approach for biomedical image analysis (Cao et al., 2018) and are designed to handle multiple array data, such as signal data and two- and three-dimensional images. Some of the most widely used CNN include AlexNet, LeNet, R-CNN, ZFNet, GoogLeNet, and ResNet (Zhao et al., 2021).
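The convolution and pooling steps described above can be illustrated with a minimal sketch in plain Python. Everything here is an assumption made for illustration: the 5×5 toy image, the hand-picked `edge_kernel` (in a trained CNN the kernel values would be learned), and the helper names. As in most DL libraries, the "convolution" is computed without flipping the kernel, and the fully connected layer is omitted.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (valid positions only, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Activation function: keep positive responses, zero out the rest."""
    return [[max(0, value) for value in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [
            max(feature_map[i + di][j + dj]
                for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# Toy image (hypothetical): dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked kernel that responds to a dark-to-bright vertical edge.
edge_kernel = [[-1, 1], [-1, 1]]

feature_map = relu(convolve2d(image, edge_kernel))
pooled = max_pool(feature_map)
print(pooled)
```

The pooled map keeps a strong response only in the column where the edge sits, which is the kind of compact feature a subsequent fully connected layer would consume.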

DNN are composed of multiple layers for transformation and use artificial neural networks as distinct processing layers. RNN are neural networks capable of forming internal memory, rendering them optimal for the analysis of sequential data (Rovini et al., 2018; Uddin et al., 2019). Bayesian networks are statistical models used to represent the probabilistic relationships among a set of variables. Another statistical model is the logistic regression model, which deploys an online gradient descent method for improved probabilistic interpretation. This model is mainly applied when the dependent variable is dichotomous (Singh et al., 2016).
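As a rough illustration of logistic regression trained by online gradient descent on a dichotomous outcome, the sketch below updates the parameters after every individual sample. The single-feature toy data, the learning rate, the epoch count, and the function names are all assumptions chosen for brevity, not values taken from the cited studies.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(samples, learning_rate=0.5, epochs=200):
    """Online gradient descent: one parameter update per (x, y) sample."""
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        for x, y in samples:
            predicted = sigmoid(weight * x + bias)
            error = y - predicted  # gradient of the log-likelihood
            weight += learning_rate * error * x
            bias += learning_rate * error
    return weight, bias


# Hypothetical one-feature dataset with a dichotomous label (0 or 1).
samples = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]
weight, bias = train_logistic(samples)

prob_high = sigmoid(weight * 2.0 + bias)   # probability of class 1 at x = 2
prob_low = sigmoid(weight * -2.0 + bias)   # probability of class 1 at x = -2
print(round(prob_high, 3), round(prob_low, 3))
```

The output of `sigmoid` can be read as a class probability, which is the probabilistic interpretation the paragraph refers to.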

Decision trees are designed to deal with inseparable information and other data that include nominal, numeric, textual, missing, or redundant values. The random forest method utilizes an ensemble of decision trees. It is robust to noise, scalable, and relatively resistant to overfitting (Singh et al., 2016; Uddin et al., 2019). As described previously, neural networks are constructed to emulate the neural structures, learning abilities, and processing methods of the human brain. These models are deployed to solve non-linear problems (Uddin et al., 2019).

SVM is a complex supervised algorithm currently considered one of the best ML algorithms (Uddin et al., 2019). SVM models can be deployed when data are not linearly separable and are designed to limit overfitting. In contrast, k-nearest neighbor (k-NN) models are non-parametric classification algorithms that assign unlabeled sample points to the class of the nearest previously labeled sample point (Singh et al., 2016).
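The nearest-neighbor rule described above can be sketched in a few lines. The two-feature training points and their labels are invented purely for illustration, and `knn_predict` is a hypothetical helper name; with `k=1` it reproduces the single-nearest-neighbor assignment, while larger `k` values take a majority vote.

```python
import math
from collections import Counter


def knn_predict(train, query, k=1):
    """Assign `query` the majority label among its k nearest labeled points."""
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical labeled samples: (feature vector, class label).
train = [
    ((1.0, 1.0), "control"),
    ((1.2, 0.9), "control"),
    ((3.0, 3.2), "patient"),
    ((3.1, 2.9), "patient"),
]

label_near_patients = knn_predict(train, (3.0, 3.0), k=1)
label_near_controls = knn_predict(train, (1.1, 1.0), k=3)
print(label_near_patients, label_near_controls)
```

Because the model stores the training points rather than fitting parameters, it is non-parametric in the sense used above.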

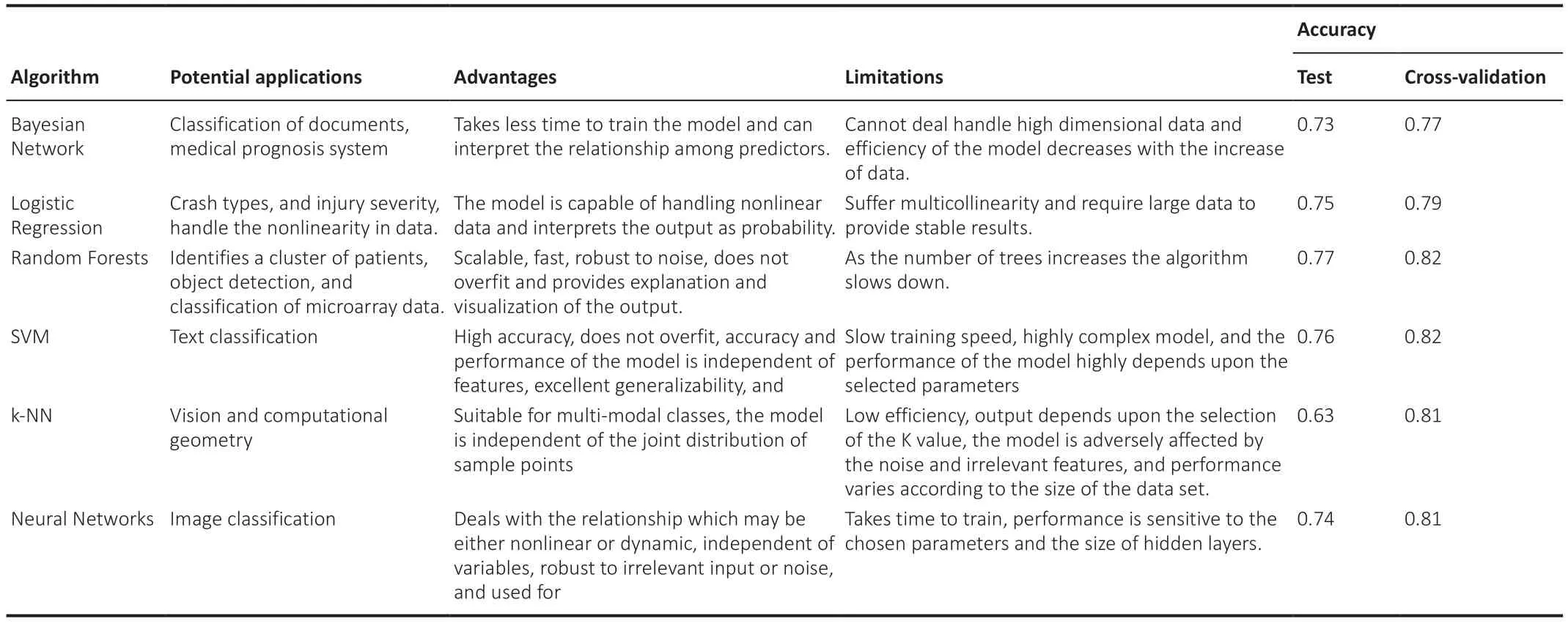

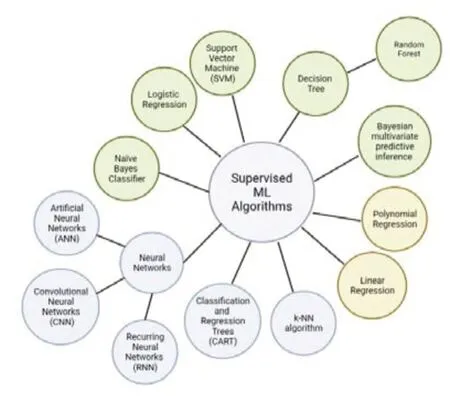

As Table 1 illustrates, complex models can underperform when their parameters are poorly chosen. However, SVM and k-NN have outperformed the other supervised ML algorithms described in Table 1. SVM and k-NN are considered among the best supervised ML algorithms. SVM is robust in comparison to linear regression, handles multiple features, is resistant to overfitting, and performs very well in classifying semi-structured and unstructured data such as text and images. k-NN, in turn, is a simple algorithm that can classify subjects quickly, is capable of handling noise and missing values, and is mainly used to solve regression and classification problems (Uddin et al., 2019). Tree-based algorithms have historically performed better than other algorithms. Figure 2 exhibits the different supervised algorithms in use and their modes of implementation.

Table 1|The potential applications, advantages, limitations, and varied accuracies of commonly used supervised learning algorithms

Figure 2|A relationship map of currently used supervised learning models.

Unsupervised models

Unlike supervised algorithms, unsupervised algorithms learn without an answer key, supervisor, or source of external information to fall back on (Kang and Jameson, 2018; Choi et al., 2020). Figure 3 demonstrates the general architecture of unsupervised neural networks. Unsupervised neural networks and other algorithms are mainly used for clustering and feature reduction (Noor et al., 2020).

Figure 3|A representation of the basic architecture of an unsupervised neural network.

Some unsupervised models are also described as self-supervised algorithms. Autoencoders (AE), which are used for compression and other functionalities, represent a unique form of self-supervised learning (Singh et al., 2015). AE are deployed to implement a feedforward approach to generating output from an input (Singh et al., 2015). In this feedforward approach, multiple inputs enter a layer and are multiplied by their respective weights. The resulting products are then added together to obtain a sum of all weighted input values (Lu et al., 2020).
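The weighted-sum step of this feedforward approach can be sketched as follows. The weights below are hand-picked rather than learned, the activation function is omitted, and the names `weighted_sum` and `dense_layer` are hypothetical; the aim is only to show an input being compressed to a shorter code and then expanded back to the input dimension.

```python
def weighted_sum(inputs, weights, bias=0.0):
    """Multiply each input by its weight and add the products together."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def dense_layer(inputs, weight_rows, biases):
    """One weighted sum per output unit (activation omitted for brevity)."""
    return [weighted_sum(inputs, row, b) for row, b in zip(weight_rows, biases)]


# Hand-picked (not learned) weights: 3 inputs -> 2-unit code -> 3 outputs.
encoder_weights = [[1, 0, 0], [0, 1, 0]]
decoder_weights = [[1, 0], [0, 1], [0, 0]]

inputs = [1.0, 2.0, 3.0]
code = dense_layer(inputs, encoder_weights, [0.0, 0.0])
reconstruction = dense_layer(code, decoder_weights, [0.0, 0.0, 0.0])
print(code, reconstruction)
```

With these toy weights the third input is lost in the two-unit code, which shows why training is needed: an autoencoder learns weights that keep the reconstruction as close to the input as the smaller code allows.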

Deep autoencoders are unsupervised models with a stacked structure composed of three parts: the input (encoder), the output (decoder), and the hidden layer (code). Sparse AE are another commonly used unsupervised ML model that relies on an axisymmetric single hidden layer for feature extraction. Stacked AE are used for the prediction of disease using time-frequency features of speech signals. Stacked autoencoders are made up of three kinds of layers: an input layer, hidden layers (which generate learned features), and an output layer of the same dimension as the input layer, which reconstructs the inputs (Li et al., 2019). Beyond the scope of artificial neural networks, other generative learning methods have also been implemented to solve complex problems. Generative learning methods include models like the deep belief network (Zhao et al., 2021). Developed in 2006, the deep belief network is an unsupervised model composed of stacked layers of restricted Boltzmann machines with pre-trained weights. Each restricted Boltzmann machine is an unsupervised generative neural network comprising two layers: visible and hidden. Generative adversarial networks represent another generative learning technique and have been applied to image-to-image translation, where one well-known model of this class is pix2pix (Zhao et al., 2021). Other common unsupervised ML algorithms used in the detection of neurodegenerative disorders include K-means and self-organizing maps, described in more detail below.

K-means: One of the simplest unsupervised ML algorithms, the K-means method represents a popular clustering algorithm used to divide a given dataset between a pre-determined number of clusters (k) based on recognized similarities and dissimilarities between data points (Raval and Jani, 2016; Ahmed et al., 2020). The model operates by randomly selecting data points to represent each cluster. These data points, known as centroids, center and define the characteristics of each cluster throughout the sorting process (Ahmed et al., 2020). However, using the K-means model presents several difficulties. For instance, varying k-value selection leads to varying convergence results (Ahmed et al., 2020). Similarly, different randomized centroid placements can generate different results (Das et al., 2017). To optimize the placement of the k centers, researchers attempt to place them as far as possible from one another (Das et al., 2017).
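A minimal sketch of the K-means procedure is given below, assuming squared Euclidean distance and random initialization from the data points. The six toy points, the `seed` parameter, and the function name `kmeans` are illustrative assumptions; the far-apart initialization strategy mentioned above is not implemented here.

```python
import random


def kmeans(points, k, iterations=20, seed=0):
    """Cluster points into k groups by alternating assignment and update."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # random data points as initial centroids
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in range(k)]
        for point in points:
            distances = [
                sum((a - b) ** 2 for a, b in zip(point, centroid))
                for centroid in centroids
            ]
            clusters[distances.index(min(distances))].append(point)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster))
            if cluster else old
            for cluster, old in zip(clusters, centroids)
        ]
        if new_centroids == centroids:  # converged
            break
        centroids = new_centroids
    return centroids, clusters


# Toy data (hypothetical): two well-separated groups of three points.
points = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0),
          (100.0, 0.0), (101.0, 0.0), (102.0, 0.0)]
centroids, clusters = kmeans(points, k=2)
print(sorted(centroids))
```

On data this cleanly separated the result does not depend on the random start; on real data, different seeds or different values of `k` can give different clusterings, which is the difficulty noted above.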

Self-organizing maps (SOM): SOM are considered one of the most efficient unsupervised neural network techniques. The basic architecture of SOM is based on competitive learning. SOM are primarily used for the cluster analysis of high-dimensional data. This technique aims to reduce the dimensionality of complex data, organizing a simplified representation of each datapoint spatially within a two-dimensional map (Sarmiento et al., 2017). Datapoints on the map are known as neurons. These neurons build a two-dimensional lattice that acts as the output layer of the SOM, where high-dimensional input space is mapped within the plane. The completed map acts as a spectrum, where the discrete features of each simplified datapoint resemble those of its immediate neighbors (Sarmiento et al., 2017).

Deep Learning Applications

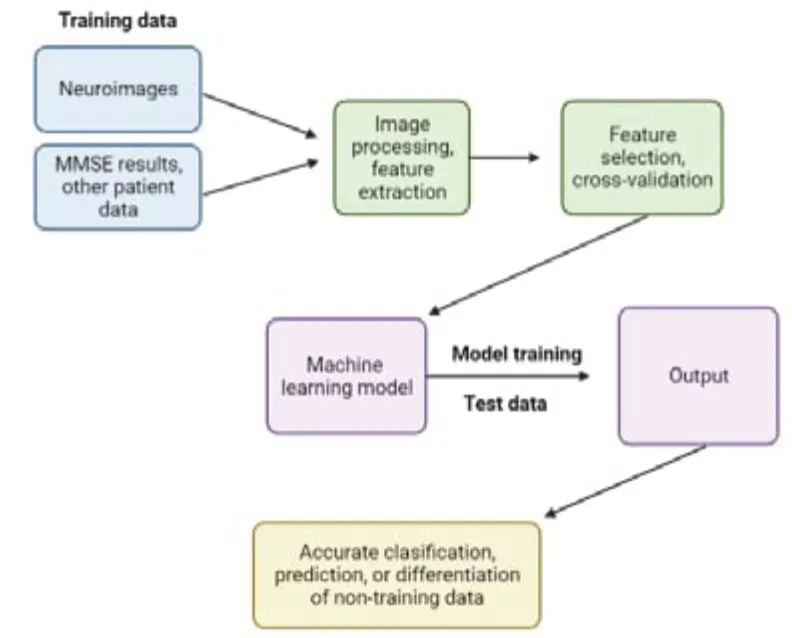

The four essential steps for image processing are acquiring an existing dataset, analyzing it to identify new patterns in the data, preprocessing the raw data, and making predictions. Depending on its accuracy, the model can then be trained on other new datasets (Figure 4).

However, the primary limitation of DL applications for the analysis of biomedical images remains the availability of only a small sample of training data in a space where additional images are not easily obtainable (Shen et al., 2017). The more complex the DL model, the more parameters it must internalize (Ying, 2019). Therefore, to construct and train a sufficiently sophisticated DL algorithm without overfitting the model, researchers must find creative ways to expand their dataset (Shen et al., 2017). To accomplish this, researchers use a range of different techniques. Data augmentation, for example, involves the artificial inflation of the existing training dataset by transforming existing images (Shorten and Khoshgoftaar, 2019). Dimensionality reduction can also be used to reduce the existing number of model parameters, or models can be pre-trained with external data (Shen et al., 2017).
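Data augmentation by simple geometric transforms can be sketched as below, with images represented as nested lists of pixel values. The choice of transforms and the helper names are assumptions for illustration; whether a given transform preserves the diagnostic label (a left-right flip of a brain scan, for example) is something to verify for each dataset rather than assume.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the original image plus three transformed copies."""
    return [image, flip_horizontal(image), flip_vertical(image), rotate_90(image)]


# Toy 2x2 "image" (hypothetical pixel values).
image = [[1, 2],
         [3, 4]]
augmented = augment(image)
for variant in augmented:
    print(variant)
```

Each original image yields four training examples here, inflating the dataset without acquiring new scans.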

Evaluation of Classifier Performance

Classifier performance is evaluated using five measures. Sensitivity, or recall, Eq (1), is the percentage of positive cases correctly identified as true. Specificity, Eq (2), is the percentage of negative cases correctly identified as false. Precision, Eq (3), is the percentage of cases correctly identified as true out of all cases diagnosed as true (Uddin et al., 2019). Accuracy, Eq (4), is the percentage of cases correctly identified with respect to all subjects, and the F-score, Eq (5), is the harmonic mean of precision and recall. To obtain these values, the numbers of True Positives (TP), False Positives (FP), True Negatives (TN), and False Negatives (FN) are calculated (Uddin et al., 2019).

Eq (1) Recall=TP/(TP+FN)

Eq (2) Specificity=TN/(TN+FP)

Eq (3) Precision=TP/(TP+FP)

Eq (4) Accuracy=(TP+TN)/(TP+TN+FP+FN)

Eq (5) F-score= 2 × (Precision × Recall)/(Precision+Recall)
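Eq (1) to Eq (5) translate directly into code. The sketch below assumes all denominators are non-zero, and the confusion-matrix counts are hypothetical numbers chosen only to make the arithmetic easy to follow.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute Eq (1)-(5) from confusion-matrix counts."""
    recall = tp / (tp + fn)                       # Eq (1)
    specificity = tn / (tn + fp)                  # Eq (2)
    precision = tp / (tp + fp)                    # Eq (3)
    accuracy = (tp + tn) / (tp + tn + fp + fn)    # Eq (4)
    f_score = 2 * (precision * recall) / (precision + recall)  # Eq (5)
    return {
        "recall": recall,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "f_score": f_score,
    }


# Hypothetical counts for 100 subjects.
metrics = classification_metrics(tp=40, fp=10, tn=45, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

For these counts, precision is 40/50 = 0.8 and accuracy is 85/100 = 0.85, while recall (40/45) is higher than specificity (45/55), showing how a single accuracy figure can hide an imbalance between the two error types.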

Machine Learning Classifiers for Alzheimer’s Disease

Currently, some of the most common DL techniques used for clinical AD prognosis include DNN, the restricted Boltzmann machine algorithm, the deep Boltzmann machine (DBM), the deep belief network, AE, Sparse AE, and Stacked AE (Altinkaya et al., 2020). Each architecture has been developed to distinguish between multi-modal neuroimaging data from cognitively normal controls and brains with MCI, commonly known as the prodromal or pre-symptomatic phase of AD. Once trained, these DL models can be used to predict the conversion of MCI to AD (Bringas et al., 2019; Altinkaya et al., 2020). AD research using DL algorithms is still evolving to achieve higher accuracy, as summarized in Table 2.

Table 2|ML algorithms developed for the classification of AD, CN, and MCI over the past ten years and their accuracies

Data mining identifies patterns and relationships, classifies complex data, and extracts useful information from the recorded data. It is a common technique used on K-means datasets for the automatic classification of normal control individuals, MCI, and AD (Nabeel et al., 2021). Extracting information from real cases helps in the early clinical detection of AD. A recent study by Uddin et al. (2019) compares supervised ML architectures for AD classification. The comparison was based on various performance metrics, including accuracy, precision, recall, and F1 score, to determine the efficiency of each classifying architecture. The study’s results demonstrated that the bagging method, which combines several learning models in parallel, outperformed all other ML architectures. Other studies have identified XGBoost as the most powerful architecture for the classification of MCI subjects (Gautam and Sharma, 2020; Noor et al., 2020).
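The idea behind bagging, training several models on bootstrap resamples and combining them by majority vote, can be sketched as follows. The base learner (a one-feature threshold "stump"), the toy data, and every name in the block are assumptions for illustration and do not reproduce the setup of the cited study.

```python
import random
from collections import Counter


def train_stump(samples):
    """Fit a threshold classifier: predict 1 when x > threshold."""
    best = None
    for threshold, _ in samples:
        errors = sum((x > threshold) != bool(y) for x, y in samples)
        if best is None or errors < best[0]:
            best = (errors, threshold)
    return best[1]


def bagging_fit(samples, n_models=11, seed=0):
    """Train each stump on a bootstrap resample (drawn with replacement)."""
    rng = random.Random(seed)
    return [
        train_stump([rng.choice(samples) for _ in samples])
        for _ in range(n_models)
    ]


def bagging_predict(thresholds, x):
    """Combine the individual stump predictions by majority vote."""
    votes = Counter(int(x > threshold) for threshold in thresholds)
    return votes.most_common(1)[0][0]


# Hypothetical one-feature dataset: label 1 for larger feature values.
samples = [(0.1, 0), (0.2, 0), (0.3, 0), (0.4, 0),
           (0.6, 1), (0.7, 1), (0.8, 1), (0.9, 1)]
models = bagging_fit(samples)
high_prediction = bagging_predict(models, 0.95)
low_prediction = bagging_predict(models, 0.05)
print(high_prediction, low_prediction)
```

Because each stump sees a slightly different resample, their individual thresholds differ, and the vote averages out some of that variability.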

Additional research coupled with AI-integrated wearable sensors has identified CERAD change scores as a valuable tool for the early detection of MCI (Gautam and Sharma, 2020; Noor et al., 2020). These studies suggest that these architectures and sensors can be implemented in a clinical setting as a diagnostic tool (Taeho et al., 2019). Integrating data mining and ML algorithms seems to promise more accurate disease predictions and classifications of AD and other forms of dementia (Uddin et al., 2019).

Machine Learning Classifiers for Parkinson’s Disease

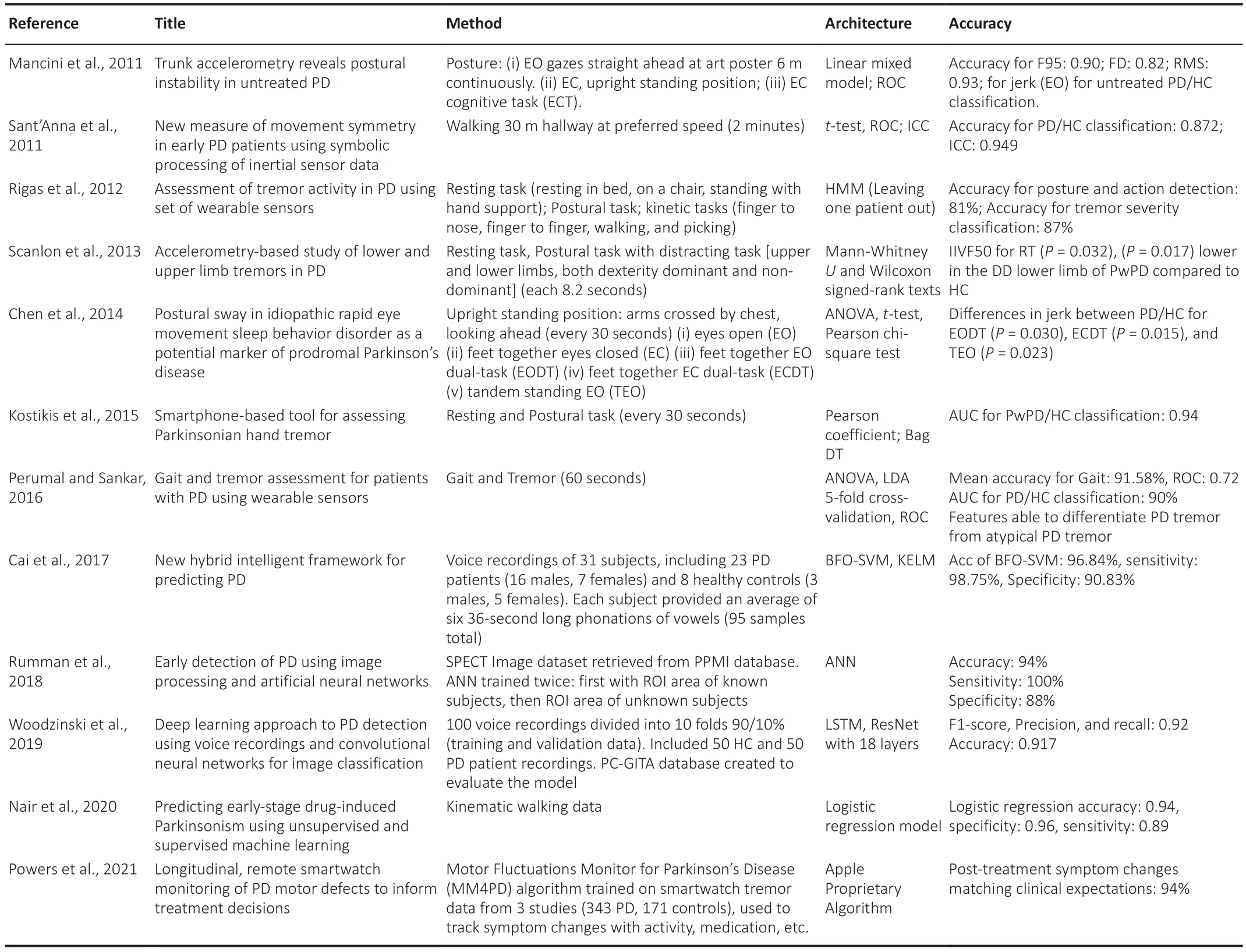

ML analysis of simple drawing tasks and handwriting is widely used in the early detection of PD. Several supervised ML algorithms are also increasingly utilized for PD symptom tracking. Figure 5 illustrates a general workflow for the supervised ML algorithms implemented in clinical practice for the identification of PD using motor symptoms (Rovini et al., 2018; Hoq et al., 2021).

Figure 4|An overview of the step-by-step process by which machine learning and computer-aided diagnosis techniques process and analyze clinical and neuroimaging data to identify features associated with neurodegenerative diseases.

To identify irregular motor characteristics implicated in PD and to differentiate between abnormal and normal hand movements, researchers implement classifier-based supervised ML algorithms on data derived from patients given horizontal drawing tasks (Hoq et al., 2021). Naïve Bayes classification has been combined with a set of metrics related to the velocity and spatiotemporal tracing of the subject’s pen to accurately differentiate between PD patients and normal control participants. Similarly, Archimedean spiral tracing performed by individuals with PD can undergo automatic analysis by a supervised model (Rovini et al., 2018). The results of algorithm-scored drawings display striking similarities to drawings independently scored by clinical experts (Nair et al., 2020). These tests are performed on patients’ personal computers using the relevant supporting software. Because they can also be performed via smartphones and tablets, they represent an accessible and cost-effective approach to the analysis of motor function (Woodzinski et al., 2019).

Two common ML-based hardware systems used in clinical practice for the identification of PD are Parkinson’s KinetiGraph and the Kinesia system (Power et al., 2019). These systems are designed specifically for the identification of dyskinesia and bradykinesia in individuals suffering from PD. The KinetiGraph system is worn on the wrist to measure wrist acceleration (Cancela et al., 2016), whereas Kinesia is worn on either a finger or wrist and detects motion via a built-in accelerometer and gyroscope (Belić et al., 2019).

These system outputs are analyzed using supervised ML algorithms, such as SVM. For instance, Kinesia recordings have been used to classify PD tremor severity. Gait analysis algorithms have also shown promising results in the early identification of motor disorders (Heldman et al., 2017). For example, RNN and long short-term memory networks have been used to classify gait recordings drawn from a database that includes measures of stride-to-stride footfall times. These algorithms have proven capable of differentiating between healthy controls and individuals suffering from PD, Huntington’s disease, and motor neuron disease, with accuracies ranging from 95% to 100%. For SVM, however, an ensemble of classifiers performs better than a single classifier. Existing algorithms can also be further optimized to improve performance (Heldman et al., 2017; Belić et al., 2019).
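To make the notion of stride-to-stride footfall times concrete, the sketch below derives simple timing features from a list of footfall timestamps. It is an illustrative assumption about how such measures might be summarized before classification; the function name `stride_features`, the choice of features, and the toy timestamps are hypothetical and are not drawn from the cited database.

```python
from statistics import mean, stdev

def stride_features(footfall_times):
    """Summarize stride-to-stride timing from successive footfalls of one foot (seconds)."""
    if len(footfall_times) < 3:
        raise ValueError("need at least three footfalls to estimate variability")
    # Each stride interval is the time between consecutive footfalls.
    intervals = [b - a for a, b in zip(footfall_times, footfall_times[1:])]
    avg = mean(intervals)
    sd = stdev(intervals)
    # The coefficient of variation expresses variability relative to the mean stride time.
    return {"mean_stride_s": avg, "stride_sd_s": sd, "stride_cv": sd / avg}

# Hypothetical recordings: a perfectly regular gait and a more variable one.
steady = [0.0, 1.0, 2.0, 3.0, 4.0]
irregular = [0.0, 0.9, 2.1, 3.0, 4.4]

steady_summary = stride_features(steady)
irregular_summary = stride_features(irregular)
```

Summary features of this kind could serve as inputs to a classifier such as SVM, whereas sequence models such as RNN or long short-term memory networks would typically consume the interval series directly.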

Bayesian multivariate predictive inference platforms have also been applied to clinical data to study PD progression. Latourelle and colleagues published a study in which they trained a model on assessments of motor progression and on the complete molecular and genetic information obtained from a group of 117 healthy controls and 312 participants with PD over two years. To identify novel predictors of motor progression in the early stages of PD, a total of 17,499 features were included in the model. Progression modeling identified common factors associated with faster motor progression, including higher baseline motor scores, older age, and male sex. It also identified new predictors, such as genetic variation and biomarkers found in patients’ cerebrospinal fluid (Latourelle et al., 2017).

As shown in Table 3, the SVM classifier appears to perform best for the differentiation of PD patients from control subjects (Cai et al., 2017). SVM algorithms achieve the highest scores in all performance measures except for precision and F-score, in which they rank second. In the existing literature, authors have also compared the accuracy of regression models for tracking the development of PD using metrics such as the measured error between paired predictions, the mean squared error, and the correlation coefficient (Nair et al., 2020). Studies have also reported that least-squares SVM outperforms both multilayer perceptron neural networks and general regression neural networks in the differentiation between healthy subjects and PD patients (Cai et al., 2017). At this point, SVM remains the most suitable method for modeling vocal features for PD prognosis and monitoring (Perumal and Sankar, 2016).
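The performance measures named above follow standard definitions, which the sketch below computes from scratch: accuracy, precision, recall, and F-score for a classifier, and mean squared error and the Pearson correlation coefficient for a regression model. The function names and the toy labels are assumptions made for illustration; the cited studies may compute or report these measures differently.

```python
import math

def classification_metrics(y_true, y_pred, positive="PD"):
    """Compute accuracy, precision, recall, and F-score for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_score = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f_score": f_score}

def regression_metrics(y_true, y_pred):
    """Compute mean squared error and the Pearson correlation coefficient."""
    n = len(y_true)
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n
    mean_t, mean_p = sum(y_true) / n, sum(y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    spread = math.sqrt(sum((t - mean_t) ** 2 for t in y_true)
                       * sum((p - mean_p) ** 2 for p in y_pred))
    return {"mse": mse, "pearson_r": cov / spread if spread else 0.0}

# Hypothetical labels and predictions, for illustration only.
clf = classification_metrics(["PD", "PD", "PD", "control", "control"],
                             ["PD", "PD", "control", "PD", "control"])
reg = regression_metrics([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
```

The regression example shows why several measures are reported together: the predictions correlate perfectly with the targets yet have a large mean squared error, so neither measure alone describes model quality.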

Table 3|ML algorithms developed for the classification of PD over the past ten years and their accuracies

Conclusion and Outlook

To implement machine learning and data mining techniques efficiently for the clinical detection of neurodegenerative disorders, more training data should be made available. More patient information, such as postmortem data, is needed to avoid a high error rate. In addition, semi-supervised algorithms that use a clustering approach should be implemented to achieve high accuracy. The literature also indicates that when ML algorithms, especially neural networks, are applied to neuroimages such as MRI, ASL, PET, and SPECT images, they should be combined with SVM and k-NN for improved results: SVM is robust to linear regressions and is best at differentiating PD patients from control subjects, whereas k-NN is a simple algorithm that classifies subjects quickly and handles noise as well as unlabeled data. For the classification of AD patients in the clinical setting, however, 3D CNN models should be implemented, since 3D models provide promising results. Moreover, recent research reveals that a Kohonen unsupervised self-organizing map and a least-squares support vector machine, when applied to structural MRI, could detect structural changes of early PD. Singh and colleagues implemented this technique on a large dataset, which validates the robustness and value of the technique (Singh et al., 2018). In summary, using combined neuroimaging, multi-modal, and clinical data could further enhance the diagnosis and early detection of neurodegenerative disorders. Before machine learning can be implemented in clinical settings for diagnosis and other applications, further validation and optimization are required to make it reliable and accurate. Despite the challenges in translating machine learning into clinical settings, it can assist clinicians in improving the differential diagnosis of Parkinsonism and AD and the early detection of neurodegenerative disorders. This can drastically reduce the error rate and help in diagnosing PD at a pre-motor stage, so that treatment can begin early and slow the progression of neurodegenerative diseases such as Parkinson’s and Alzheimer’s disease.

Researchers intend to develop DL classification models based on time series to learn patients’ temporal patterns. Even though great success has been achieved in the diagnosis of brain disorders using functional MRI (fMRI) images, these successes remain far from providing an effective clinical diagnosis (Yin et al., 2022). To implement fMRI in a clinical setting, researchers must first develop reliable and explainable biomarkers. Future DL models coupled with neuroimaging should be capable of classifying more than one disorder against healthy controls with high accuracy (Yin et al., 2022). Combining fMRI with complementary data such as electronic medical records, EEG, and structural MRI images could help yield better results (Yin et al., 2022).

Other fusion methods still in development include a cross-modal representation-based method for fMRI images that shows enhanced performance over traditional DL models. However, there remains a need for more training samples for multimodal fusion (Yan et al., 2022).

Another important yet relatively new field in neuroimaging is neural architecture search (NAS) (Yan et al., 2022). NAS automatically selects, composes, and parameterizes DL models to achieve maximum accuracy and optimal model performance on provided fMRI or other neural images (Yan et al., 2022). NAS techniques show additional promise due to their optimization of three components: the search space, the search strategy, and the performance estimation strategy (Yan et al., 2022). The search space is the set of potential neural architectures that the NAS algorithm can consider (Yan et al., 2022). The search strategy describes how this search space is explored. Finally, the performance estimation strategy refers to the evaluation parameters used to assess the performance of candidate architectures on various training datasets (Yan et al., 2022).
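The three NAS components can be illustrated with a deliberately simple sketch. Here the search space is a small dictionary of options, the search strategy is random sampling, and the performance estimation strategy is a placeholder scoring function. All names and values (`SEARCH_SPACE`, `estimate_performance`, the scoring formula) are hypothetical; a real NAS system would train each candidate network and score it on validation data, and would usually rely on a more sophisticated search strategy.

```python
import random

# Search space: the candidate architectures the algorithm may consider.
SEARCH_SPACE = {
    "num_layers": [2, 4, 6],
    "units_per_layer": [32, 64, 128],
    "activation": ["relu", "tanh"],
}

def sample_architecture(rng):
    """Search strategy (random search): pick one option per dimension."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}

def estimate_performance(arch):
    """Performance estimation: a made-up stand-in for validation accuracy."""
    score = 0.5 + 0.05 * arch["num_layers"] - 0.0005 * abs(arch["units_per_layer"] - 64)
    return score + (0.02 if arch["activation"] == "relu" else 0.0)

def random_search(num_trials, seed=0):
    """Evaluate randomly sampled architectures and keep the best-scoring one."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(num_trials):
        arch = sample_architecture(rng)
        score = estimate_performance(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score

best_arch, best_score = random_search(num_trials=50)
```

Separating the three components in this way means that any one of them can be replaced, for example swapping random sampling for an evolutionary strategy, without changing the other two.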

Scientists are working on developing wearable sensors with embedded digital signal readout software for the early diagnosis and symptom monitoring of neurodegenerative diseases (Asci et al., 2022). For instance, ongoing research seeks to develop electrochemical/biocatalytic sensors for the detection of L-Dopa, a dopaminergic precursor molecule, in patients at risk for PD. These wearable, minimally invasive sensors allow researchers and clinicians to correlate plasma levels of L-Dopa and the severity of motor symptoms, tailoring the treatment accordingly (Asci et al., 2022). Small inertial sensors such as tri-axial accelerometers and gyroscopes can be placed on different body parts to examine patient motor activity (Mughal et al., 2022). Capable of recording 3D kinematic and spatial-temporal data related to the body’s spatial orientation and motion (Avalle et al., 2021), these sensors can be integrated with random forest algorithms for signal pattern recognition and classification (Asci et al., 2022). Such classifications could be further integrated into clinical settings to objectively analyze motor symptoms and quantify PD severity and progression (Mughal et al., 2022).
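Before tri-axial accelerometer signals reach a classifier such as a random forest, they are commonly reduced to summary features per time window. The sketch below shows one plausible way to do this; it is an assumption for illustration, not the pipeline of the cited studies, and the function names, window size, and toy samples are hypothetical.

```python
import math
from statistics import mean, pstdev

def magnitude(sample):
    """Combine the three axes into one orientation-independent acceleration value."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az)

def window_features(samples, window_size):
    """Split a recording into non-overlapping windows and summarize each window."""
    features = []
    for start in range(0, len(samples) - window_size + 1, window_size):
        mags = [magnitude(s) for s in samples[start:start + window_size]]
        features.append({
            "mean_mag": mean(mags),
            "sd_mag": pstdev(mags),
            "range_mag": max(mags) - min(mags),
        })
    return features

# Hypothetical recording: four samples at rest, then four samples of stronger movement.
recording = [(0.0, 0.0, 1.0)] * 4 + [(3.0, 4.0, 0.0)] * 4
features = window_features(recording, window_size=4)
```

Each resulting feature dictionary corresponds to one row of input for a downstream classifier, with a clinician-assigned severity score as a possible training label.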

In contrast, infrared ambient sensors can be placed in the home as well as in smartphones, tablets, or wristwatches to monitor behavioral symptoms of AD (Gillani and Arslan, 2021). These sensors monitor signals related to patients’ interest in and interaction with their environment, providing information that is processed using ML algorithms to predict the patient’s declining cognitive functionality (Perumal and Sankar, 2016). This continuous feedback helps caregivers and health professionals by providing autonomous patient support and monitoring disease progression (Gillani and Arslan, 2021).

Other wearable sensors such as Neuroglass are also being developed for the early detection of neurodegenerative disorders (Asci et al., 2022). Neuroglass seeks to integrate sensors capable of tracking head movement, velocity, acceleration, blood pressure, body temperature, blood oxygenation, electroencephalography, electro-oculography, and trans-cranium impedance (Avalle et al., 2021). However, Neuroglass’ primary technique measures eye motion and ocular tremor. Clinicians and scientists believe that eye and head movement quantification could represent an effective approach for the early detection of neurological disorders, drastically reducing diagnostic error (Avalle et al., 2021).

Although the integration of ML and wearable technology could soon emerge as a predictive tool for the early diagnosis of neurological diseases, important areas for further research remain. These include the detection of pre-symptomatic cognitive decline at the cellular level, improvements in gait and movement analysis for patients using wearable sensors, patient memory recollection using video modeling, and the role of telemedicine in the treatment of neurological diseases.

Abbreviations: AD: Alzheimer’s disease; AE: autoencoders; AI: artificial intelligence; ANN: artificial neural networks; ASL: arterial spin labeling; CN: cognitively normal; CNN: convolutional neural networks; DBM: deep belief network; DL: deep learning; DNN: deep neural networks; EC: eyes closed; ECDT: EC dual-task; EO: eyes open; EODT: EO dual-task; fMRI: functional magnetic resonance imaging; HC: healthy controls; k-NN: k-nearest neighbor; MCI: mild cognitive impairment; ML: machine learning; MM4PD: motor fluctuations monitor for Parkinson’s disease; MRI: magnetic resonance imaging; NAS: neural architecture search; PD: Parkinson’s disease; PET: positron emission tomography; ResNet: residual neural networks; RNN: recurrent neural networks; ROC: receiver operating characteristic curve; SOM: self-organizing maps; SPECT: single-photon emission computed tomography; SVM: support vector machine; TEO: tandem standing EO.

Author contributions: Conceptualization, investigation, writing-original draft, writing-reviewing and editing, supervision: FK, DW, SM; investigation, visualization, writing-original draft, writing-reviewing and editing: JO. All authors approved the final version of this manuscript.

Conflicts of interest: The authors declare no conflicts of interest.

Open access statement: This is an open access journal, and articles are distributed under the terms of the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 License, which allows others to remix, tweak, and build upon the work non-commercially, as long as appropriate credit is given and the new creations are licensed under the identical terms.
