Broad Learning System for Tackling Emerging Challenges in Face Recognition

Wenjun Zhang and Wenfeng Wang

1 School of Computer Science and Technology, Hainan University, Haikou, 570228, China

2 Shanghai Institute of Technology, Shanghai, 201418, China

3 Interscience Institute of Management and Technology, Bhubaneswar, 752054, India

ABSTRACT Face recognition has developed rapidly and is widely used. However, considerable uncertainty remains in computational intelligence based on human-centric visual understanding. Emerging challenges for face recognition result from information loss. This study aims to tackle these challenges with a broad learning system (BLS). We integrated two models, IR3C with BLS and IR3C with a triplet loss, to control the learning process. In our experiments, we used different strategies to generate more challenging datasets and analyzed the competitiveness, sensitivity, and practicability of the two proposed models. For IR3C with BLS, the recognition rates under all four challenging strategies are 100%. For IR3C with a triplet loss, the recognition rates are 94.61%, 94.61%, 96.95%, and 96.23%, respectively. The experimental results indicate that the two proposed models perform well in tackling the information-loss challenges considered in face recognition.

KEYWORDS Computational intelligence; human-centric; visual understanding

1 Introduction

Computational intelligence in face recognition based on visible images has developed rapidly and is widely used in many practical scenarios, such as security [1–9], finance [10–19], and health care services [20–29]. However, owing to lighting conditions [30–33], facial expression [34,35], pose [36,37], occlusion [38,39], and other confounding factors [40], some valuable information is easily lost. Dealing with information loss is an emerging challenge for face recognition that has rarely been addressed in previous studies, so a method for generating new datasets that pose this challenge is urgently needed. Multi-sensor information fusion [41–50] analyzes and processes the multi-source information collected by sensors and combines it. The combination of multi-source information can be carried out automatically or semi-automatically [51–54]. When face images are fused, some information that is valuable for face recognition may be lost, which in turn yields a more challenging dataset.

There are three main categories of sensor fusion: complementary, competitive, and co-operative [55]. These categories make multi-sensor information fusion more reliable and informative [56,57]. Such fusion has been widely used in transportation [58–67], materials science [68–77], automation control systems [78,79], medicine [80–85], and agriculture [86–95]. The fusion strategy aims to create images with almost negligible distortion by maintaining image quality in all aspects relative to the original or source images [96,97]. When different types of images obtained in the same environment are fused, the redundant information can improve the reliability and fault tolerance of the data [98,99]. Visible sensors capture light reflected from the surface of objects to create visible images [100–103], while infrared sensors obtain thermal images [104]. There are already many studies on the fusion of visible and thermal images [105–118], and such fusion has been integrated with face recognition [119–121]. Thermal images suffer from problems such as low contrast [122,123], blurred edges [124,125], temperature sensitivity [126–128], glass rejection [129–132], and few texture details [133,134]. Fusing visible and thermal face images will blur or hide some valuable information and can therefore be employed to generate a challenging dataset for face recognition.

The objectives of this study are 1) to use information fusion technologies to generate more challenging datasets for face recognition and 2) to propose two models that tackle this challenge. The rest of the paper is organized as follows. Section 2 introduces four fusion strategies (maximum-value fusion, minimum-value fusion, weighted-average fusion, and PCA fusion) and completes the modelling approach. In Section 3, the proposed models are applied to the fused challenging datasets, and their sensitivity is evaluated by cross-validation with changes in the weights. The competitiveness and practicability of the two models are discussed at the end of the paper.

2 The Modelling Approach

2.1 Problem Formulation

In this experiment, we used the TDIRE and TDRGBE datasets of the Tufts Face Database, which contain 557 thermal images and 557 visible images, respectively, of 112 persons [135]. In the TDIRE dataset, the 1st person has 3 thermal images, the 31st person has 4, and every other person has 5. Each person has the same number of visible images in the TDRGBE dataset as thermal images in the TDIRE dataset. First, we cropped the faces in all 1114 images with the CascadeClassifier from OpenCV. Then we fused the thermal and visible images of each person to form a challenging dataset of 557 fused images. Finally, we used the TDRGBE dataset as the training set and the fused dataset as the testing set.
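As a consistency check on the counts above (3 + 4 + 110 × 5 = 557 images per modality, 1114 in total), the train/test protocol can be sketched as follows. This is a minimal Python sketch only: the helper names `tufts_image_counts` and `build_protocol`, the tuple identifiers that stand in for cropped images, and the pairing of the k-th thermal image with the k-th visible image of the same person are hypothetical, and the OpenCV face-cropping step is left out.

```python
# Minimal sketch of the evaluation protocol; helper names, the tuple
# identifiers, and index-wise thermal/visible pairing are hypothetical.

def tufts_image_counts(n_persons=112):
    """Images per person in TDIRE; TDRGBE has the same count per person."""
    exceptions = {1: 3, 31: 4}   # person 1 has 3 images, person 31 has 4
    return {p: exceptions.get(p, 5) for p in range(1, n_persons + 1)}

def build_protocol(counts, fuse):
    """Training set: visible faces. Test set: fused thermal/visible faces."""
    train, test = [], []
    for person, n in counts.items():
        for k in range(n):
            visible = ("TDRGBE", person, k)   # stand-in for a cropped visible face
            thermal = ("TDIRE", person, k)    # stand-in for a cropped thermal face
            train.append((visible, person))
            test.append((fuse(thermal, visible), person))
    return train, test

counts = tufts_image_counts()
train, test = build_protocol(counts, fuse=lambda a, b: ("fused", a, b))
print(len(counts), sum(counts.values()), len(train), len(test))  # 112 557 557 557
```

The `fuse` argument is where any of the four fusion strategies described next would be plugged in.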

During the process of image fusion,we employ four different strategies to form a gradient of difficulty:

1) Select the maximum value in fusion. The fused image is obtained by taking, at each pixel position, the larger of the two values in each pair of thermal and visible images, as follows:

$$F(i,j)=\max\{A(i,j),\,B(i,j)\}$$

where A(i,j) is the thermal image, B(i,j) is the corresponding visible image, and F(i,j) is the fused image.

This strategy selects the maximum pixel value, which raises the gray values of the fused image. The fused image therefore favors information from pixels with high exposure or high temperature, so much valuable information is lost after fusion, which makes face recognition more challenging.

2) Select the minimum value in fusion. This strategy is the opposite of 1): it selects the minimum pixel value, which lowers the gray values of the fused image, and it may also lose much valuable information. In our experiment, it makes the fused image favor information from pixels with low exposure and low temperature.

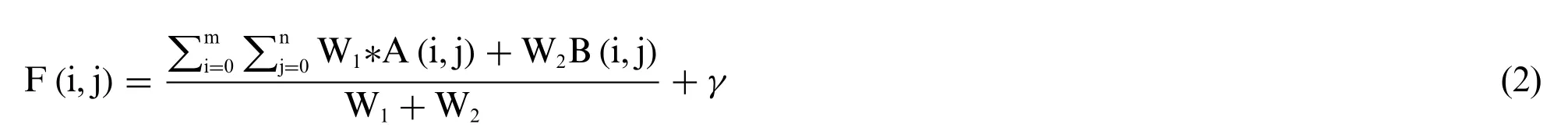

3) Use a weighted average in fusion. The fused image is obtained by computing the weighted sum of each pair of input images at the corresponding position and adding a fixed scalar γ, as follows:

$$F(i,j)=W_1A(i,j)+W_2B(i,j)+\gamma$$

where A(i,j) is the thermal image, B(i,j) is the corresponding visible image, and F(i,j) is the fused image. W1 and W2 are the weights allocated to A(i,j) and B(i,j), respectively, and γ is a fixed scalar. In our experiment, we set W1, W2, and γ to 0.5, 0.5, and 10, respectively.

This strategy changes the signal-to-noise ratio of the fused image and weakens its contrast. Valuable signals that appear in only one image of a pair may be lost, which forms a challenge.

4) Use the PCA (Principal Component Analysis) algorithm in fusion by replacing the first principal component of the visible image with that of the corresponding thermal image, as follows:

$$F(i,j)=P_1(i,j)+P_2(i,j)$$

where P1(i,j) is the first principal component of the thermal image and P2(i,j) is the corresponding visible image without its first principal component.

This strategy reduces image resolution, and some information on the spectral characteristics carried by the first principal component of the original images is lost. This also forms a challenge.
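The four strategies can be sketched in NumPy as below, assuming 8-bit grayscale face images that are already cropped, aligned, and of equal size. Strategies 1 to 3 follow the descriptions directly (with W1 = W2 = 0.5 and γ = 10 as defaults). Strategy 4 is only a hypothetical reading: the text does not say how the principal components are computed, so this sketch treats the rank-1 term from the leading singular triplet of each image's SVD as its "first component". The function names are illustrative, and rounding with saturation to [0, 255] is our own choice.

```python
import numpy as np

def fuse_max(thermal, visible):
    """Strategy 1: keep the larger gray value at every pixel."""
    return np.maximum(thermal, visible)

def fuse_min(thermal, visible):
    """Strategy 2: keep the smaller gray value at every pixel."""
    return np.minimum(thermal, visible)

def _to_uint8(x):
    # Round and saturate to the valid 8-bit gray range.
    return np.clip(np.rint(x), 0, 255).astype(np.uint8)

def fuse_weighted(thermal, visible, w1=0.5, w2=0.5, gamma=10.0):
    """Strategy 3: W1*A + W2*B + gamma."""
    return _to_uint8(w1 * thermal.astype(np.float64)
                     + w2 * visible.astype(np.float64) + gamma)

def _first_component(img):
    """Rank-1 term from the leading singular triplet of the image matrix.

    ASSUMPTION: used here as the image's 'first principal component';
    the paper does not specify how the component is obtained.
    """
    u, s, vt = np.linalg.svd(img, full_matrices=False)
    return s[0] * np.outer(u[:, 0], vt[0])

def fuse_pca_swap(thermal, visible):
    """Strategy 4 (hypothetical reading): swap in the thermal first component."""
    a = thermal.astype(np.float64)
    b = visible.astype(np.float64)
    p1 = _first_component(a)          # first component of the thermal image
    p2 = b - _first_component(b)      # visible image without its first component
    return _to_uint8(p1 + p2)

rng = np.random.default_rng(0)
A = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)  # stand-in thermal face
B = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)  # stand-in visible face
fused = {f.__name__: f(A, B) for f in (fuse_max, fuse_min, fuse_weighted, fuse_pca_swap)}
```

Because the max and min rules pick each pixel from only one source image, they discard more information than the weighted and PCA rules, which mix both sources; this matches the difficulty ordering discussed in Section 3.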

2.2 IR3C with a Triplet Loss

Compared with conventional methods, IR3C [136] is much more effective and efficient in dealing with face occlusion, corruption, illumination, and expression changes. The algorithm robustly identifies outliers and reduces their effect on the coding process by adaptively and iteratively assigning weights to the pixels according to their coding residuals.

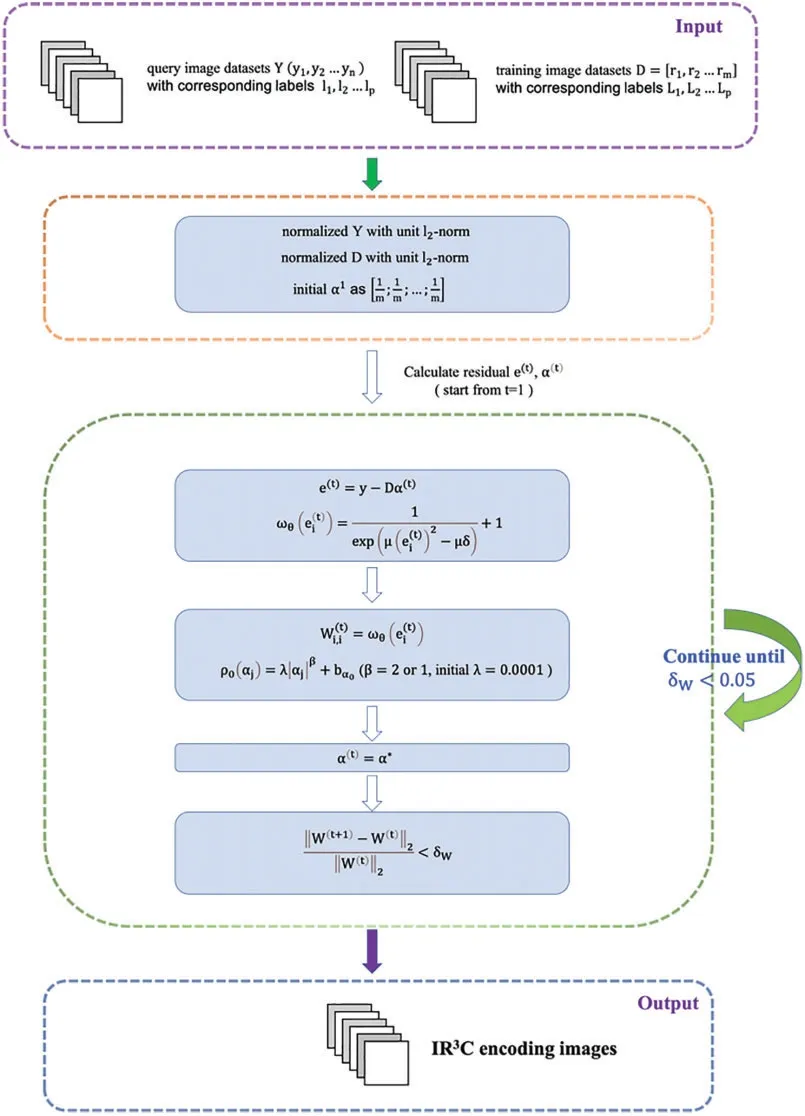

Details for computing the residuals and updating the weights are summarized in Fig. 1.
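Since the steps in Fig. 1 are not spelled out in the text, the sketch below shows one plausible form of the iteration under stated assumptions. It assumes (1) a logistic weight function of the squared residual with parameters δ and μ, taken from the regularized robust coding literature rather than from this paper; (2) an ℓ2-regularized weighted least-squares coding step; (3) δ and μ held fixed at their initial values 0.6 and 8 instead of being re-estimated each iteration; and (4) pixel values scaled to roughly [0, 1]. The names `residual_weights`, `ir3c_code`, `classify`, and `lam` are hypothetical.

```python
import numpy as np

def residual_weights(e, delta, mu):
    """Logistic pixel weight: near 1 for small residuals, near 0 when e**2 >> delta.

    ASSUMED form: w = exp(mu*delta - mu*e**2) / (1 + exp(mu*delta - mu*e**2)).
    """
    z = np.clip(mu * delta - mu * e ** 2, -50.0, 50.0)  # avoid overflow in exp
    return 1.0 / (1.0 + np.exp(-z))

def ir3c_code(D, y, lam=1e-3, delta=0.6, mu=8.0, n_iter=10):
    """Iteratively reweighted, l2-regularized coding of y over dictionary D.

    D: (n_pixels, n_atoms), columns are training faces; y: (n_pixels,) test face.
    """
    w = np.ones(D.shape[0])
    for _ in range(n_iter):
        WD = D * w[:, None]                                   # W D with W = diag(w)
        # Solve (D^T W D + lam I) alpha = D^T W y.
        alpha = np.linalg.solve(D.T @ WD + lam * np.eye(D.shape[1]), WD.T @ y)
        w = residual_weights(y - D @ alpha, delta, mu)        # reweight pixels by residual
    return alpha, w

def classify(D, labels, y, **kw):
    """Assign y to the class with the smallest weighted reconstruction residual."""
    alpha, w = ir3c_code(D, y, **kw)
    residuals = {c: np.sum(w * (y - D[:, labels == c] @ alpha[labels == c]) ** 2)
                 for c in np.unique(labels)}
    return min(residuals, key=residuals.get)

# Toy usage: two 20-pixel "faces" and a class-1 probe with one corrupted pixel.
p0 = np.r_[np.ones(10), np.zeros(10)]
p1 = np.r_[np.zeros(10), np.ones(10)]
D = np.column_stack([p0, 0.9 * p0, p1, 0.9 * p1])
labels = np.array([0, 0, 1, 1])
y = p1.copy()
y[0] = 1.0
print(classify(D, labels, y))
```

The corrupted pixel receives a large residual and therefore a small weight, so it barely influences either the coding or the class decision.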

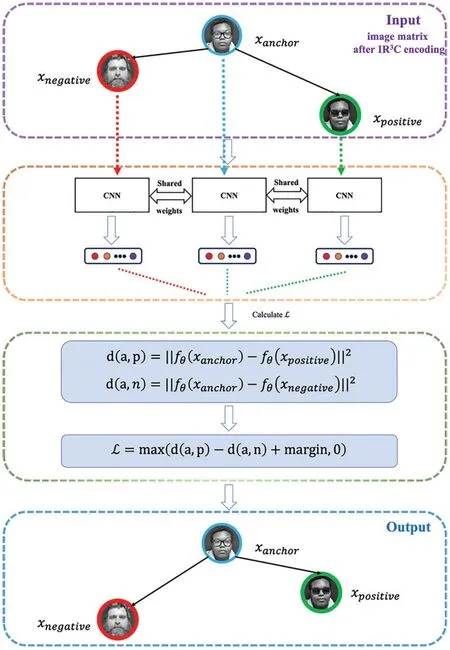

We improve the IR3C algorithm with a triplet loss [137] to tackle the above challenges, as shown in Fig. 2.

As shown in Fig. 2, the triplet loss minimizes the Euclidean distance between similar images (anchor and positive images) and maximizes the distance between dissimilar images (anchor and negative images). The margin is a threshold that enforces a minimum gap between the anchor-positive and anchor-negative distances. In our experiments, we set the margin to 0.2.

Figure 1:Description of the IR3C algorithm. W(t) is the estimated diagonal weight matrix at iteration t. δ is the demarcation-point parameter, and μ controls the rate at which the weight decreases between 1 and 0. We initialized δ as 0.6 and μ as 8; both are then re-estimated in each iteration

Figure 2:Triplet loss on a positive face pair and one negative face (in the purple dotted rectangle), along with the mechanism that minimizes the loss (in the green dotted rectangle)
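The triplet loss described above can be written out concretely. The NumPy sketch below assumes the FaceNet-style formulation with squared Euclidean distances between embeddings, averaged over a batch, and the margin 0.2 used in our experiments; the exact form used with IR3C may differ, and the function name is hypothetical.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Triplet loss averaged over a batch of embeddings, each of shape (n, d).

    Per triplet: max(||a - p||^2 - ||a - n||^2 + margin, 0), using squared
    Euclidean distances (an assumption about the exact form).
    """
    d_ap = np.sum((anchor - positive) ** 2, axis=1)   # anchor-positive distance
    d_an = np.sum((anchor - negative) ** 2, axis=1)   # anchor-negative distance
    return float(np.mean(np.maximum(d_ap - d_an + margin, 0.0)))

a = np.array([[0.0, 0.0]])
p = np.array([[0.1, 0.0]])
easy_n = np.array([[1.0, 0.0]])   # farther than p by more than the margin: no loss
hard_n = np.array([[0.3, 0.0]])   # within the margin, so it is penalized
print(triplet_loss(a, p, easy_n), triplet_loss(a, p, hard_n))
```

Only triplets whose negative is not yet pushed at least a margin beyond the positive contribute a gradient, which is why the choice of triplets matters during training.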

2.3 IR3C with Broad Learning System

The Broad Learning System (BLS) was proposed by C. L. Philip Chen to overcome the shortcomings of learning with deep structures [138]. BLS achieves excellent classification accuracy and learns faster than deep neural networks. Therefore, we improve the IR3C algorithm with the Broad Learning System to tackle the above challenges, as shown in Fig. 3.

Figure 3:Broad Learning System with increments of input data (images after IR3C encoding), enhancement nodes, and mapped nodes. Here, A^m_n denotes the original network containing n groups of feature nodes and m groups of enhancement nodes
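For readers unfamiliar with BLS, the NumPy sketch below shows its core idea: random mapped feature nodes Z, random nonlinear enhancement nodes H, and output weights for A = [Z | H] obtained in closed form by ridge regression instead of backpropagation, which is why BLS trains quickly. It assumes X holds one feature vector per image (for example, IR3C coding vectors). The class name, node counts, λ, tanh activation, and linear mapped features are illustrative assumptions; the sparse-autoencoder fine-tuning and the incremental updates shown in Fig. 3 are omitted.

```python
import numpy as np

class BroadLearningSystem:
    """Minimal BLS classifier with a closed-form ridge-regression readout."""

    def __init__(self, n_groups=10, nodes_per_group=10, n_enhance=100, lam=1e-3, seed=0):
        self.n_groups, self.nodes_per_group = n_groups, nodes_per_group
        self.n_enhance, self.lam, self.seed = n_enhance, lam, seed

    def _nodes(self, X):
        # Mapped feature nodes Z_i = X W_i + b_i (random and linear in this sketch).
        Z = np.hstack([X @ W + b for W, b in self.map_params])
        # Enhancement nodes H = tanh(Z W_h + b_h).
        H = np.tanh(Z @ self.W_h + self.b_h)
        return np.hstack([Z, H])   # A = [Z | H]

    def fit(self, X, y):
        rng = np.random.default_rng(self.seed)
        d = X.shape[1]
        self.map_params = [(rng.standard_normal((d, self.nodes_per_group)),
                            rng.standard_normal(self.nodes_per_group))
                           for _ in range(self.n_groups)]
        n_z = self.n_groups * self.nodes_per_group
        self.W_h = rng.standard_normal((n_z, self.n_enhance)) / np.sqrt(n_z)
        self.b_h = rng.standard_normal(self.n_enhance)
        self.classes_ = np.unique(y)
        Y = (y[:, None] == self.classes_[None, :]).astype(float)   # one-hot targets
        A = self._nodes(X)
        # Ridge solution W_out = (A^T A + lam I)^{-1} A^T Y.
        self.W_out = np.linalg.solve(A.T @ A + self.lam * np.eye(A.shape[1]), A.T @ Y)
        return self

    def predict(self, X):
        return self.classes_[np.argmax(self._nodes(X) @ self.W_out, axis=1)]

# Toy usage on two well-separated 2-D clusters (synthetic, not face data).
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-3, 0.5, (50, 2)), rng.normal(3, 0.5, (50, 2))])
y = np.repeat([0, 1], 50)
model = BroadLearningSystem().fit(X, y)
print((model.predict(X) == y).mean())
```

Training reduces to a single linear solve, so adding nodes or data changes only the readout computation; the original BLS exploits this with incremental pseudo-inverse updates.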

3 Experiments and Discussion

3.1 Model Performance of IR3C with BLS

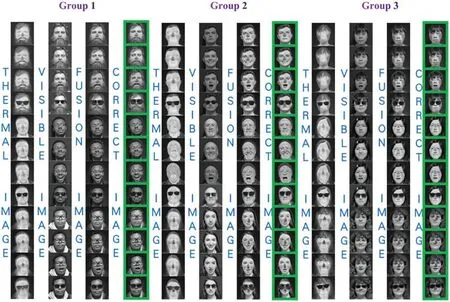

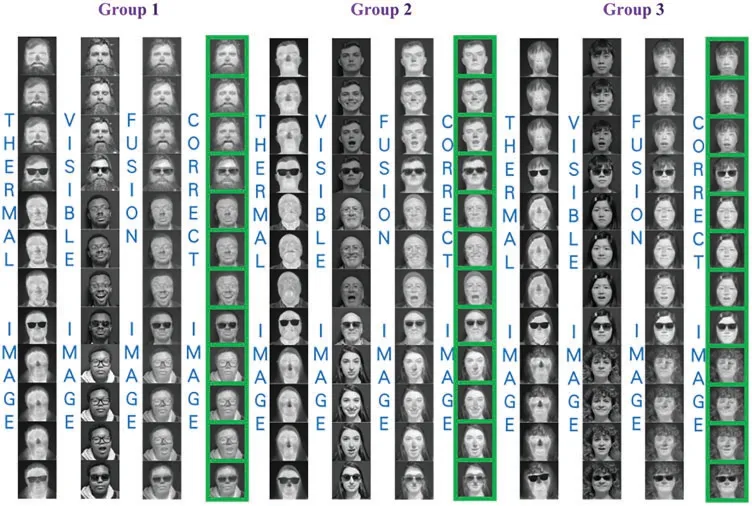

We employed four fusion methods to challenge our models. For each fusion method, we display 36 results divided into three groups. In each group, the first column shows thermal images, the second column visible images, the third column the fusion results of the first two columns, and the fourth column the correctly recognized images, framed with green borders to distinguish them from the other columns.

Fig. 4 shows the experimental results of selecting the maximum value, where “CORRECT IMAGE” denotes an image that our model successfully recognized. In this fusion method, the larger of the two source pixel values is simply taken as the fused pixel, so the gray intensity of the fused pixel is enhanced.

Figure 4:The experimental results of Select Maximum value

From Fig. 4, we find that the fused images contain less texture information; selecting the maximum value heavily reduces the contrast of the whole image. The recognition rate of this method is 100%, higher than the 93.35% achieved by pure IR3C in [119]. This shows that our model can tackle the challenge of information loss.

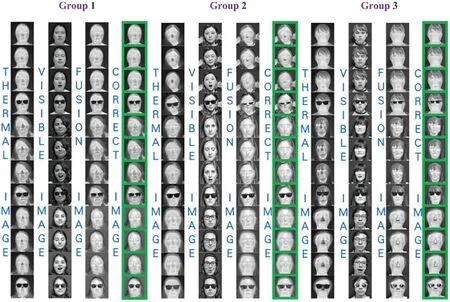

Fig. 5 shows the experimental results of selecting the minimum value, where “CORRECT IMAGE” denotes an image that our model successfully recognized. In this fusion method, the smaller of the two source pixel values is taken as the fused pixel, so the gray intensity of the fused pixel is attenuated.

From Fig. 5, we find that the fused images contain less texture information; selecting the minimum value reduces the contrast of the whole image. Owing to image alignment and cropping, some valuable information is hidden. The recognition rate of our method is 100%, better than the 58.056% of pure IR3C in [119].

Figs. 6 and 7 show the experimental results of weighted-average and PCA fusion, respectively, where “CORRECT IMAGE” denotes an image that our model successfully recognized.

Figure 5:The experimental results of select minimum value

Figure 6:The experimental results of weighted average

Figure 7:The experimental results of PCA

The weighted-average algorithm combines the pixel values at corresponding positions of the two images according to fixed weights and adds a constant to obtain the fused image. In the experiment, we set both weights to 0.5 and the scalar to 10. The method is easy to implement and improves the signal-to-noise ratio of the fused images. From Fig. 6, we find that the fused images retain more texture information from the thermal and visible images than those of the former two methods, so the weighted average preserves the information of the original images effectively. However, some valuable information is still weakened. The recognition rate of this method is 100%, which is competitive with the 99.488% of pure IR3C in [119].

From Fig. 7, we find that, compared with selecting the maximum or minimum value, the fused images keep the vital information from the thermal and visible images, so PCA preserves the information of the original images effectively. Nevertheless, many details are weakened. The recognition rate of this method is 100%, which is competitive with the 98.721% of pure IR3C in [119]. All the results in Figs. 4–7 show that our model performs well in dealing with information loss.

3.2 Model Performance of IR3C with a Triplet Loss

We challenged our model with the same four fusion approaches as in Section 3.1.

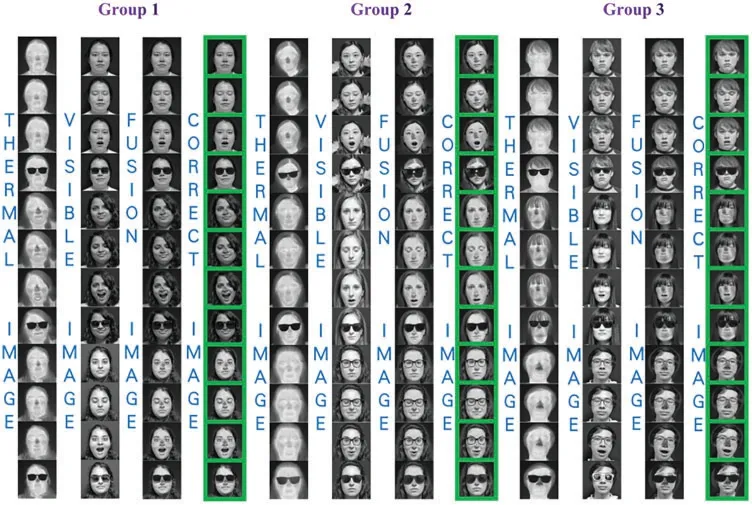

Fig. 8 shows the experimental results of selecting the maximum value, where “CORRECT IMAGE” denotes an image that our model successfully recognized.

From Fig. 8, we find that the thermal images contain less information than the visible images. During fusion, the pixel values of the thermal images are larger than those of the visible images, so the fused images lose abundant texture information. The recognition rate of this method is 94.61%, higher than the 93.35% of pure IR3C in [119]. This shows that our model has an advantage in overcoming the challenge of information loss.

Figure 8:The experimental results of select maximum value

Fig. 9 shows the experimental results of selecting the minimum value, where “CORRECT IMAGE” denotes an image that our model successfully recognized.

Figure 9:The experimental results of select minimum value

From Fig. 9, we find that the fused images lose some texture information; selecting the minimum value heavily reduces the contrast of the whole image. The recognition rate of our method is 94.61%, better than the 58.056% of pure IR3C in [119]. This indicates that our model greatly improves the recognition rate under information loss.

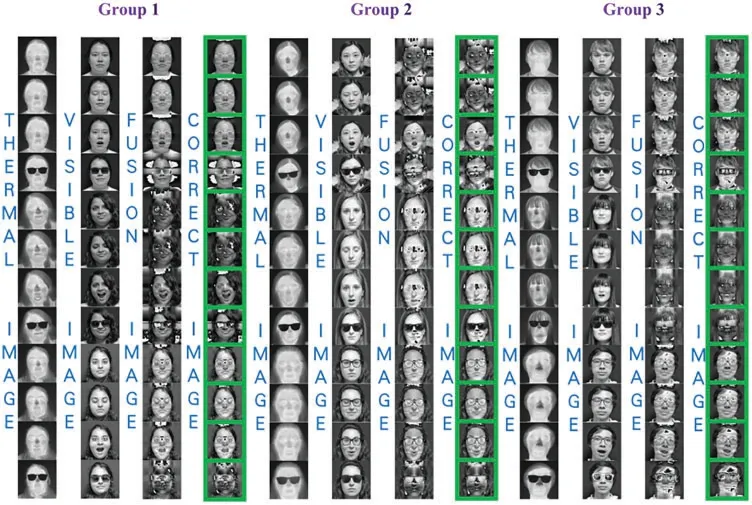

Figs. 10 and 11 show the experimental results of weighted-average and PCA fusion, respectively, where “CORRECT IMAGE” denotes an image that our model successfully recognized.

Figure 10:The experimental results of weighted average

From Fig. 10, we find that the fused images contain more texture information from the thermal and visible images than those of the former two methods. However, some valuable information is still weakened. The recognition rate of this method is 96.95%, lower than the 99.488% of pure IR3C in [119]. From Fig. 11, we find that, although the fused images retain the primary information from the thermal and visible images better than selecting the maximum or minimum value, some valid information is still lost. The recognition rate of this method is 96.23%, lower than the 98.721% of pure IR3C in [119].

Despite the less competitive recognition rates of weighted-average and PCA fusion, we consider the results acceptable. Compared with our previous work [119], the present work uses more images: 557 thermal images and 557 visible images. All the results in Figs. 8–11 show that our model performs well in dealing with information loss.

Figure 11:The experimental results of PCA

3.3 Analyses of the Sensitivity

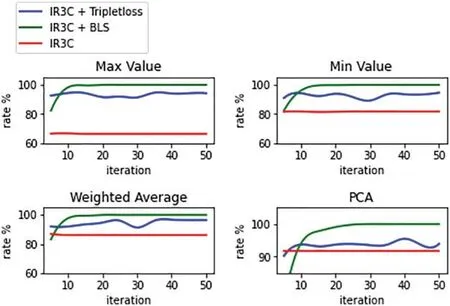

Table 1 lists the recognition rates for the four fusion techniques. To test the sensitivity of the parameters, we conducted further experiments in which we varied the number of iterations of IR3C, triplet loss, and BLS from 5 to 50 in steps of 5. This gave 10 points for each model, from which we drew the recognition-rate curves in Fig. 12.

Table 1:Recognition rates of each fusion technique

Figure 12:Recognition rates of variant iterations
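The iteration sweep and the recognition rate it reports can be organized as in the short Python sketch below. Here `evaluate` is a hypothetical callable that would run one of the models with the given number of iterations on the fused test set and return its recognition rate; the helper names are also hypothetical. The error rate of a setting is simply 1 minus its recognition rate.

```python
def recognition_rate(predicted, truth):
    """Fraction of test faces assigned to the correct identity."""
    if len(predicted) != len(truth) or len(truth) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(p == t for p, t in zip(predicted, truth)) / len(truth)

def iteration_sweep(evaluate, start=5, stop=50, step=5):
    """Call evaluate(n_iter) for n_iter = 5, 10, ..., 50 and collect the rates."""
    return {n: evaluate(n) for n in range(start, stop + 1, step)}

# Toy usage with a stand-in evaluator (not real results): every setting
# recognizes 3 of 4 hypothetical test faces.
rates = iteration_sweep(lambda n: recognition_rate([1, 2, 3, 3], [1, 2, 3, 4]))
print(sorted(rates))
print(1 - rates[50])   # error rate for one setting
```

Plotting `rates` for each model against the iteration count yields curves of the kind shown in Fig. 12.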

From Table 1 and Fig. 12, we find that PCA and weighted-average fusion preserve more valuable information than the other two methods. This is reasonable because PCA and weighted-average fusion contain information from both the thermal and visible images. Moreover, although all four fusion methods posed challenges, our model still performed well, which shows that it is robust to different fusion strategies.

Fig. 12 shows that IR3C has an advantage in robustness. However, when facing the challenge of information loss, the recognition rate of pure IR3C is not satisfactory. After we extended IR3C with the Broad Learning System [138] and with a triplet loss, the recognition rates improved greatly. The curves in Fig. 12 show that IR3C with BLS and IR3C with a triplet loss reach high recognition rates within a few iterations, after which the rates remain high and relatively stable.

3.4 Competitiveness and Practicability

Most previous studies focused on using image fusion to overcome the shortcomings of a single image, and face recognition has mainly been based on visible images. Since capturing visible images requires external light, environmental illumination may dramatically affect the accuracy of face recognition [139,140]. Thermal images, particularly in the Long-Wave InfraRed (LWIR, 7 μm–14 μm) and Mid-Wave InfraRed (MWIR, 3 μm–5 μm) bands, are used to address the limitations of the visible spectrum in low-light applications, because they effectively capture discriminative information from body heat signatures [141–143]. A resulting challenge is that some information valuable for face recognition may be lost when visible and thermal images are fused. This study uses such fusion to generate a challenging dataset for face recognition, and we proposed IR3C with a triplet loss to improve the recognition rate under information loss.

Unlike previous studies, we used relatively crude fusion methods (selecting the maximum value, selecting the minimum value, weighted averaging, and PCA) to challenge the two face recognition models. The corresponding recognition rates of IR3C with BLS are 100%, 100%, 100%, and 100%, and those of IR3C with a triplet loss are 94.61%, 94.61%, 96.95%, and 96.23%, respectively. Correspondingly, the error rates of the latter are 5.39%, 5.39%, 3.05%, and 3.77%. This suggests that PCA fusion and weighted-average fusion preserve more valuable information than the other two methods, which is reasonable: PCA and weighted averaging combine information from both the thermal and visible images, whereas selecting the maximum or minimum value takes each pixel from only one image, which leads to more information loss.

This research also continues the work of Wang et al. [119], which used 391 thermal images and 391 visible images from the Tufts Face Database. All four fusion strategies were included in [119], where the recognition rates after weighted-average, PCA, maximum-value, and minimum-value fusion were 99.48%, 98.721%, 93.35%, and 58.056%, respectively. Benefiting from BLS, the recognition rates after these four strategies have improved to 100%, 100%, 100%, and 100%, respectively. However, for IR3C with a triplet loss, the recognition rates after weighted-average and PCA fusion are 96.95% and 96.23%, lower than the 99.48% and 98.721% of the previous IR3C model. We think this is acceptable because we used 557 thermal images and 557 visible images from the datasets. We found that the unrecognized images were poorly aligned and that much valuable information was hidden or not selected, which shows that a great deal of information is lost during fusion.

We not only extended IR3C to IR3C with BLS and IR3C with a triplet loss but also analyzed the sensitivity of the models in further experiments, varying the number of iterations of IR3C, IR3C with BLS, and IR3C with a triplet loss. The recognition-rate curves show that the proposed models tackle the information-loss challenge steadily and reach high accuracy after a few iterations.

The model can be further improved in subsequent studies. During the experiments, we met some difficulties. Selecting hard triplets too aggressively often makes training unstable, and mining hard triplets is time-consuming; in practice, suitable triplets are hard to select. We suggest choosing moderately hard (semi-hard) triplets in subsequent studies. IR3C places few requirements on image sharpness, so it can be applied to low-cost, low-resolution devices. Besides, it performs well under face occlusion, corruption, lighting, and expression changes, so it could be used to recognize a face obscured by a mask or in a dim environment. With the help of BLS, the model can be trained quickly. These properties provide a good basis for further improvement in subsequent studies.
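One widely used rule for picking moderately hard triplets is FaceNet's semi-hard criterion: a negative is kept when it is farther from the anchor than the positive but still within the margin. The NumPy sketch below applies that rule to precomputed squared distances, assuming the margin 0.2 used in our experiments; the function names are hypothetical, and this is one possible selection scheme rather than the one used in this paper.

```python
import numpy as np

def semi_hard_mask(d_ap, d_an, margin=0.2):
    """True where a negative is semi-hard: d_ap < d_an < d_ap + margin."""
    d_an = np.asarray(d_an, dtype=float)
    return (d_an > d_ap) & (d_an < d_ap + margin)

def pick_semi_hard(d_ap, d_an, margin=0.2):
    """Index of the closest semi-hard negative, or None if there is none."""
    d_an = np.asarray(d_an, dtype=float)
    candidates = np.flatnonzero(semi_hard_mask(d_ap, d_an, margin))
    if candidates.size == 0:
        return None
    return int(candidates[np.argmin(d_an[candidates])])

# Squared distances from one anchor to its positive and to four negatives.
d_ap = 0.5
d_an = [0.3, 0.55, 0.65, 0.9]   # hard, semi-hard, semi-hard, easy
print(pick_semi_hard(d_ap, d_an))
```

Skipping the hardest negatives (closer than the positive) avoids the unstable training mentioned above, while skipping easy negatives keeps each selected triplet informative.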

Various factors affect visible-light face recognition rates, such as expression changes, occlusions, pose variations, and lighting [144–148]. Since the Tufts dataset used in our experiments was collected under good lighting conditions, no low-light face images were involved in the fusion; therefore, the visible images in this dataset contain more information than the thermal images. Previous studies have shown that fused images can be applied to masked face recognition and low-light conditions [148], which suggests that fusing thermal and visible images can substantially improve face recognition. In future work, we will try to reduce the influence of secondary factors in the experiments and use more images from different environments, such as those with occlusions or low light intensity, to further explore the robustness of our model.

We further analyzed the fused images that were recognized incorrectly and found that the CascadeClassifier used to align the face regions of different images was not perfect. This produced distinct facial contours in the fused images, meaning that facial features were changed. Therefore, we will improve the image alignment method and the fusion strategies in subsequent studies.

4 Conclusion

In this paper, we propose two models, IR3C with a triplet loss and IR3C with BLS, to tackle the information-loss challenge in face recognition. To generate a challenging dataset, we use four methods to fuse the images. For IR3C with BLS, the recognition rates after the four fusion methods are 100%, 100%, 100%, and 100%, respectively; for IR3C with a triplet loss, they are 94.61%, 94.61%, 96.95%, and 96.23%, respectively. Besides, we conducted sensitivity experiments on both models by varying the number of iterations of IR3C, BLS, and triplet loss. The experimental results show that both models, IR3C with BLS and IR3C with a triplet loss, perform well in tackling the considered challenge in face recognition. Nevertheless, the Tufts database was collected under good lighting conditions without corruption, so the visible images contain more information than the thermal images in our experiments. Further research in more complicated environments and conditions is still necessary and should be a priority for future work.

Data Availability: All the data utilized to support the theory and models of the present study are available from the corresponding authors upon request.

Funding Statement: This research was funded by the Shanghai High-Level Base-Building Project for Industrial Technology Innovation (1021GN204005-A06), the National Natural Science Foundation of China (41571299), and the Ningbo Natural Science Foundation (2019A610106).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

Computer Modeling in Engineering & Sciences, 2023, Issue 3
